filmov

tv

SUPERCHARGED Python Functions!! #python #programming #coding

Background Music:

Creative Commons / Attribution 3.0 Unported License (CC BY 3.0)

Supercharged python functions python programming coding

When you Over Optimize a Python Function

Supercharge Python Functions!! #python #programming #coding #learnwithpratap

Functions in Python are easy 📞

Python super function 🦸

Different Approach to Define Function in Python - Python Short Series Ep. 110 #python #coding

How to Supercharge Your Python Code: Demystifying Functions!

Yes, You Can Do THIS With Functions In Python

I NEVER Knew THIS Python Function Existed Before...

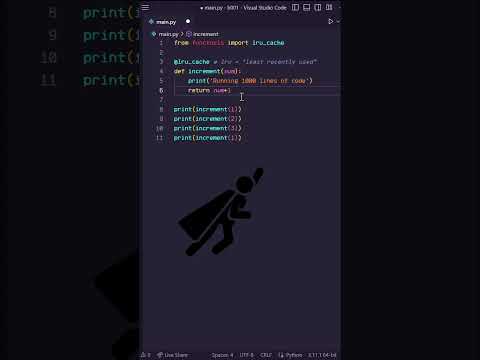

Python 3 - Supercharge your functions with the cache decorator!

Make python code BLAZINGLY fast with this decorator!

Super Useful Function in Python #python #coding #programming

Have you ever used this #python function?

python functions #python #programming #coding

Intermediate Python Tutorial #3 - Map() Function

How can decorators add functionality in Python? Supercharge Python #functions with Decorators!

one of my favourite python functions | #shorts

Python Hack: Supercharge Your Code with Lambda Functions in Map Function

Python Function Trick! #shorts

Hidden Functions of Python: any() and all()

What is Scope in Python??

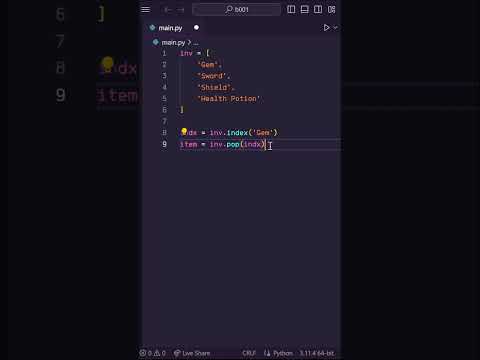

Python List Organization!! #python #programming #coding

Flow in Prefect - Supercharge Your Data Pipeline in Python
