filmov.tv

This is why understanding database concurrency control is important


The two articles I found

What is a database in under 4 minutes

Learn What is Database | Types of Database | DBMS

What is Database & Database Management System DBMS | Intro to DBMS

Database Tutorial for Beginners

What is a Relational Database?

Database vs Data Warehouse vs Data Lake | What is the Difference?

What is database | DBMS | Lec-2 | Bhanu Priya

Learn Database Normalization - 1NF, 2NF, 3NF, 4NF, 5NF

Day 24 | SQL in Data Science - Explained! @Nxtivia #100dayschallenge #datascience

SQL Server Tutorial For Beginners | SQL Server: Understanding Database Fundamentals | Simplilearn

Introduction To DBMS - Database Management System | What Is DBMS? | DBMS Explanation | Simplilearn

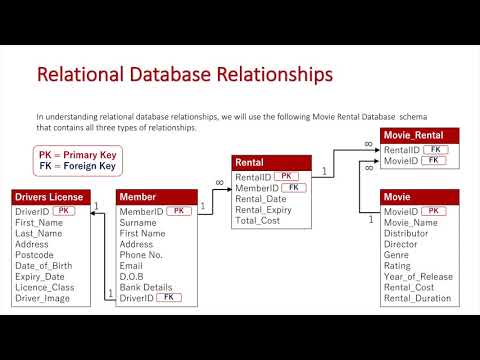

Relational Database Relationships (Updated)

What is a database schema?

What Is Database Management System ? | What Is DBMS ?

What is a Graph Database?

Database vs Spreadsheet - Advantages and Disadvantages

Database Design Course - Learn how to design and plan a database for beginners

What Is SQL? | SQL Explained in 2 Minutes | What Is SQL Database? | SQL For Beginners |Simplilearn

Understand Database Security Concepts

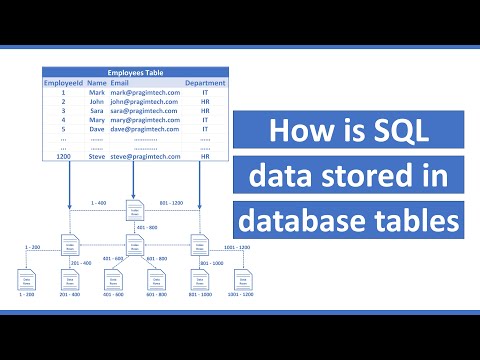

How is data stored in sql database

What is Normalization in SQL, and Why is it Important ? | Database Management System | DBMS #shorts

Basic Concept of Database Normalization - Simple Explanation for Beginners

Database Sharting Explained

Lec 1: Introduction to DBMS | Database Management System

Комментарии

0:03:47

0:03:47

0:12:11

0:12:11

0:03:55

0:03:55

0:05:32

0:05:32

0:07:54

0:07:54

0:05:22

0:05:22

0:05:38

0:05:38

0:28:34

0:28:34

0:00:40

0:00:40

0:25:17

0:25:17

0:17:06

0:17:06

0:06:19

0:06:19

0:04:46

0:04:46

0:05:17

0:05:17

0:03:56

0:03:56

0:07:06

0:07:06

8:07:20

8:07:20

0:01:27

0:01:27

0:27:17

0:27:17

0:07:04

0:07:04

0:00:57

0:00:57

0:08:11

0:08:11

0:00:32

0:00:32

0:22:21

0:22:21