filmov.tv

Why some speakers and amps exceed 20kHz


If the limit of human hearing is 20kHz, why would speaker and amplifier manufacturers concern themselves with exceeding that limit?

A SIMPLE Rule For Choosing An Amplifier | Ohms, Watts, & More

How much power do your speakers need? | Crutchfield

How to match amps to speakers

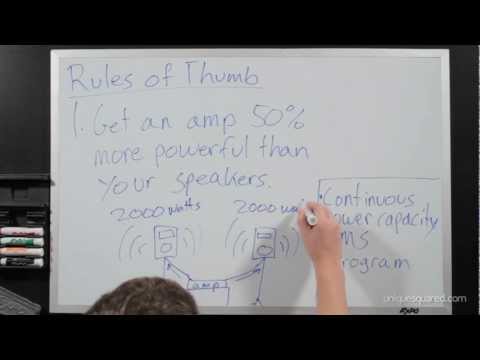

Amplifier to Speaker Matching Tutorial | UniqueSquared.com

Receivers vs Amplifiers! Everything you need to know!

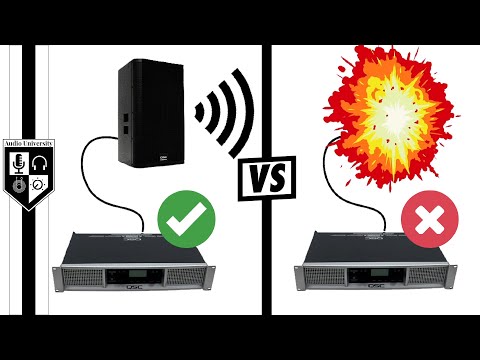

WARNING: Breaking These Rules Could Destroy Your Amplifier & Speakers!

Active vs Passive Speakers | Do You Need An Amplifier?

How to match amps to speakers

Vandersteen and B&K Monoblocks #speakers #music #jazz #audiofilo #hifi #audiophile #amplifiers

How to choose a car amplifier | Crutchfield

Why You Should Use A Powerful Amp For Your Speakers

3 Reasons You Need An Amplifier

Bi-Wiring & Bi-Amping Explained | What is it? How do you do it? Is it worth it? Let's talk ...

Amplifiers: General | Car Audio 101

What's the difference between amplifier classes? | Crutchfield

Speaker Impedance (Ohms) and How to Match Amps w/ Speakers

How To Pair Speaker Cabinets & Guitar Amps (Without Blowing Anything Up!)

Use an Amplifier with Twice the Power of Your Speakers?!

Sound bar mini amplifier testing, 48 Million Views!

The Hidden Costs of CHEAP AMPS!

How To Set Up An Amplifier [Bridge vs Parallel vs Stereo]

Wire Ferrules Tutorial #shorts #caraudio #bass #installation #subwoofer #amplifier

Car Audio on a Budget? What should you upgrade first and last for YOUR SYSTEM?

Ultimate Guide to Passive Speaker - Choosing the Right Amplifier | Kanto YU Passive 4' & 5....
