Piotr Bojanowski: Unsupervised methods for deep learning and applications

Abstract:

Modern machine learning algorithms require large amounts of supervised data to train. Successful computer vision models such as Mask R-CNN are trained on 80k MS-COCO images, but they rely heavily on networks pre-trained on the 1.2M images of ImageNet.

Obtaining reliable annotations is very costly. When the labels correspond to objective properties, such as facial landmark locations, the annotation procedure is relatively easy. For more conceptual labels, such as human actions in video, the process is ill-defined and very time-consuming. Finally, annotation is even more demanding in expert domains such as law or medicine, which require highly skilled professionals in the loop.

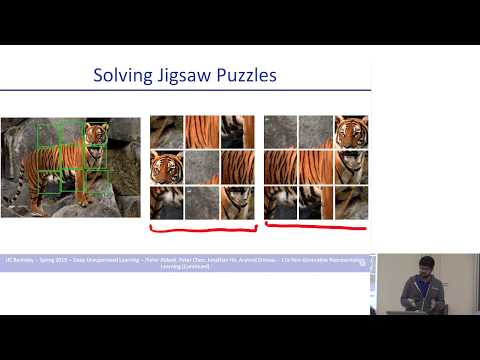

In the absence of large supervised datasets like ImageNet, pre-training models with unsupervised learning algorithms can be beneficial. Such algorithms make it possible to encode priors about the data, which makes complex models easier to train. Unsupervised pre-training of neural networks received considerable interest in the mid-2000s as a way to overcome the data scarcity of that time.

In this talk, I will present some of our contributions in this area. In the first part, I will discuss unsupervised learning methods for natural language processing, including character-level language modeling and character-based word vector representations. In the second part, I will present unsupervised models for computer vision that we designed to train convolutional neural networks without using labels.