Lecture 14: Simplified Attention Mechanism - Coded from scratch in Python | No trainable weights

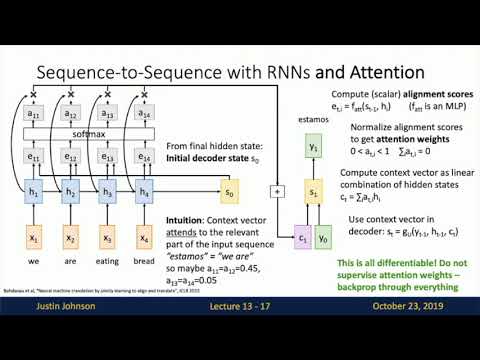

In this lecture, we code a simplified attention mechanism from scratch, in Python. In the process, we learn about context vectors, attention scores and attention weights. We pay equal attention to theory, visual intuition and code.
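
The three quantities named above can be illustrated with a minimal plain-Python sketch. The six 3-dimensional embedding vectors are illustrative values chosen for this sketch (an assumption, not data taken from the lecture), and the second token is arbitrarily used as the query. Attention scores are dot products between the query and every input. Attention weights are those scores normalised to sum to 1, shown both with simple normalisation (divide by the sum) and with softmax. The context vector is the attention-weighted sum of all inputs. No trainable weights are involved.

```python
import math

# Illustrative 3-dimensional embeddings for a six-token sentence (assumed values).
inputs = [
    [0.43, 0.15, 0.89],
    [0.55, 0.87, 0.66],
    [0.57, 0.85, 0.64],
    [0.22, 0.58, 0.33],
    [0.77, 0.25, 0.10],
    [0.05, 0.80, 0.55],
]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    """Softmax normalisation; subtracting the max keeps exp() numerically stable."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

query = inputs[1]  # the second token acts as the query in this sketch

# Attention scores: similarity between the query and every input token.
attn_scores = [dot(query, x) for x in inputs]

# Simple normalisation: divide each score by the total so the weights sum to 1.
simple_weights = [s / sum(attn_scores) for s in attn_scores]

# Softmax normalisation: weights are always positive and also sum to 1.
attn_weights = softmax(attn_scores)

# Context vector: attention-weighted sum of all input embeddings.
context = [sum(w * x[d] for w, x in zip(attn_weights, inputs)) for d in range(len(query))]

print("scores: ", [round(s, 4) for s in attn_scores])
print("weights:", [round(w, 4) for w in attn_weights])
print("context:", [round(c, 4) for c in context])
```

Softmax is generally preferred over simple division because it stays well behaved when some scores are negative and it emphasises the largest scores more strongly.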

0:00 Lecture objective

2:29 Context vectors

9:34 Coding embedding vectors in Python

14:45 What are attention scores?

19:18 Dot product and attention scores

22:57 Coding attention scores in Python

26:22 Simple normalisation

34:07 Softmax normalisation

37:34 Coding attention weights in Python

43:46 Context vector calculation visualised

50:19 Coding context vectors in Python

55:29 Coding attention score matrix for all queries

01:00:22 Coding attention weight matrix for all queries

01:04:27 Coding context vector matrix for all queries

01:14:10 Need for trainable weights in the attention mechanism
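
The chapters from 55:29 onward extend the single-query computation to every token at once. One way to sketch this in plain Python, with illustrative embeddings that are an assumption rather than the lecture's data, is: compute the full score matrix as the inputs multiplied by their own transpose, apply softmax to each row, then multiply the weight matrix by the inputs so that row i is the context vector for token i. Nothing here is learned; the trainable query, key and value projections that the final chapter motivates are deliberately left out.

```python
import math

# Illustrative 3-dimensional embeddings, one row per token (assumed values).
inputs = [
    [0.43, 0.15, 0.89],
    [0.55, 0.87, 0.66],
    [0.57, 0.85, 0.64],
    [0.22, 0.58, 0.33],
    [0.77, 0.25, 0.10],
    [0.05, 0.80, 0.55],
]

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def softmax_rows(m):
    """Apply a numerically stable softmax to each row independently."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

# Attention score matrix (6 x 6): entry (i, j) is the dot product of token i with token j.
attn_scores = matmul(inputs, transpose(inputs))

# Attention weight matrix (6 x 6): each row sums to 1.
attn_weights = softmax_rows(attn_scores)

# Context vector matrix (6 x 3): row i is the context vector for query token i.
context_vecs = matmul(attn_weights, inputs)

for row in context_vecs:
    print([round(v, 4) for v in row])
```

Because the scores come from plain dot products of the raw embeddings, the score matrix is symmetric and fixed for a given input; this is exactly the limitation that trainable weights are introduced to remove.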

=================================================

Vizuara philosophy:

As we learn the AI/ML/DL material, we will share thoughts on what is actually useful in industry and what has become irrelevant. We will also share a lot of information on which subjects contain open areas of research. Interested students can start their research journey there.

If you are a student who is confused or stuck in your ML journey, courses and offline videos may not be inspiring enough. What might inspire you is seeing someone else learn and implement machine learning from scratch.

No cost. No hidden charges. Pure old school teaching and learning.

=================================================

🌟 Meet Our Team: 🌟

🎓 Dr. Raj Dandekar (MIT PhD, IIT Madras department topper)

🎓 Dr. Rajat Dandekar (Purdue PhD, IIT Madras department gold medalist)

🎓 Dr. Sreedath Panat (MIT PhD, IIT Madras department gold medalist)

🎓 Sahil Pocker (Machine Learning Engineer at Vizuara)

🎓 Abhijeet Singh (Software Developer at Vizuara, GSOC 24, SOB 23)

🎓 Sourav Jana (Software Developer at Vizuara)
