filmov.tv

Information theory and coding || part-6 || example - entropy and mutual entropy


information theory and coding

Why Information Theory is Important - Computerphile

What is information theory? | Journey into information theory | Computer Science | Khan Academy

Information Theory Basics

Information Theory, Lecture 1: Defining Entropy and Information - Oxford Mathematics 3rd Yr Lecture

Claude Shannon Explains Information Theory

Definition of a 'bit', in information theory

The Story of Information Theory: from Morse to Shannon to ENTROPY

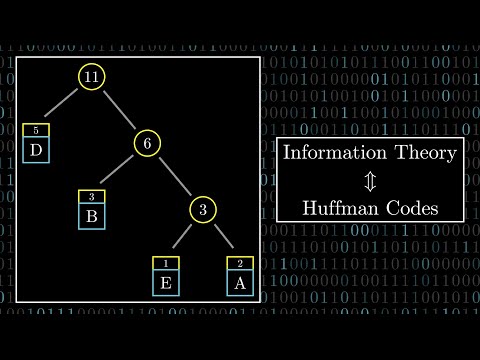

Huffman Codes: An Information Theory Perspective

Basics of Information Theory | Information Theory and Coding

The Most Important (and Surprising) Result from Information Theory

L2: Information Theory Coding | Uncertainty, Properties of Information with Proofs | ITC Lectures

Solving Wordle using information theory

Information Theory: Introduction to Coding

L 1 | Part 1 | Introduction to Information | Information Theory & Coding | Digital Communication...

Convolution Codes | Information Theory and Coding

ITC | INFORMATION THEORY AND CODING | KTU | S6 ECE | ECT306 | 2019 SCHEME | BEST CLASS IN 2025

What's Information Theory?

Information Theory

Information (Basics, Definition, Uncertainty & Property) Explained in Digital Communication

What are Channel Capacity and Code Rate?

Huffman Coding | Lecture 6| Information Theory & Coding Technique| ITCCN

Stanford Seminar - Information Theory of Deep Learning, Naftali Tishby

Communication 15 | Information Theory & Coding - Episode 1 | GATE Crash Course Electronic

Markov sources part1

Комментарии

0:12:33

0:12:33

0:03:26

0:03:26

0:16:22

0:16:22

0:53:46

0:53:46

0:02:18

0:02:18

0:01:00

0:01:00

0:41:15

0:41:15

0:29:11

0:29:11

0:01:05

0:01:05

0:09:10

0:09:10

0:25:16

0:25:16

0:30:38

0:30:38

0:05:57

0:05:57

0:07:03

0:07:03

0:00:42

0:00:42

1:46:16

1:46:16

0:00:58

0:00:58

0:00:25

0:00:25

0:16:58

0:16:58

0:18:16

0:18:16

0:13:59

0:13:59

1:24:44

1:24:44

0:59:18

0:59:18

0:16:27

0:16:27