Task-parallel computing: Samuel's tutorial

Samuel's tutorial on task-parallel computing (history, analysis and implementation).

Timestamps:

00:00 - Task-Parallel Computing: Samuel's tutorial

00:38 - Moore's Law

01:42 - Moore's Law - Historical Data

03:01 - Dennard Scaling

04:28 - The End Of Dennard Scaling

06:27 - Amdahl's Law

08:35 - Gustafson's Law

10:27 - Memory Models For Parallel Computing

11:23 - Shared Memory Variants

12:37 - Forms Of Parallelism

13:18 - Task-Parallel Platforms

14:04 - Fork-Join Parallelism

15:32 - An Example: Fibonacci

16:54 - Parallel Code

18:26 - Computation DAG

19:43 - Parallel Computation Analysis: Assumptions

20:30 - Work/Span Analysis

23:02 - Parallel Analysis

Detailed description:

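We start with a description of key historical trends that underpin parallel computing, beginning with Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years) and its implications for transistor development. Next, we discuss how Dennard Scaling enabled remarkable improvements in single-core performance for several decades. Under Dennard Scaling, power density stays roughly constant as transistors shrink. It ultimately broke down around 2005.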

We then turn to Amdahl's Law, which limits the speedup achievable for a fixed-size problem according to the fraction of the work that must run serially. Gustafson's Law, by contrast, describes how the availability of extra processing power often changes the problem itself, typically by making larger problem sizes worth solving.
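
As a rough illustration of the two laws, the sketch below computes the speedup each one predicts. It assumes the standard textbook forms:

- Amdahl's speedup is 1 / ((1 - f) + f / N) for a fixed-size problem run on N processors, where f is the parallelizable fraction.
- Gustafson's scaled speedup is (1 - f) + f * N, where f is the fraction of the parallel machine's run time spent in parallel work.

The function names are illustrative, not taken from the video.

```python
def amdahl_speedup(f: float, n: int) -> float:
    """Amdahl's Law: speedup of a fixed-size problem on n processors.

    f is the fraction of the single-processor run time that can be parallelized.
    """
    serial = 1.0 - f
    return 1.0 / (serial + f / n)


def gustafson_speedup(f: float, n: int) -> float:
    """Gustafson's Law: scaled speedup when the problem grows with n.

    f is the fraction of the n-processor run time spent in parallel work.
    """
    serial = 1.0 - f
    return serial + f * n


if __name__ == "__main__":
    f = 0.95
    for n in (1, 8, 64, 1024):
        print(f"n={n:5d}  Amdahl={amdahl_speedup(f, n):7.2f}  "
              f"Gustafson={gustafson_speedup(f, n):8.2f}")
    # Amdahl's speedup can never exceed 1 / (1 - f), i.e. 20 for f = 0.95.
    print(f"Amdahl upper bound: {1.0 / (1.0 - f):.2f}")
```

With f = 0.95, Amdahl's speedup levels off below 20 however many processors are added. Gustafson's scaled speedup keeps growing roughly linearly in n, which is the contrast the two laws draw.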

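Next, we discuss memory models for parallel computing: shared memory and distributed memory. We also give some details on two shared-memory architectures:

- symmetric multiprocessing with uniform memory access (SMP/UMA);
- distributed shared memory with non-uniform memory access (DSM/NUMA).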
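
To make the distinction concrete, here is a minimal single-process sketch that uses Python threads as stand-ins for processors.

- In the shared-memory version, every worker updates one variable that all of them can see, so updates are serialized with a lock.
- In the distributed-memory version, each worker owns a private copy of its data and communicates only by sending a message. The message channel is simulated here with a `queue.Queue` mailbox.

A real distributed-memory program would run separate processes on separate nodes (for example with MPI). The function names are hypothetical.

```python
import queue
import threading


def split(data, num_workers):
    """Deal the input out round-robin into num_workers chunks."""
    return [data[i::num_workers] for i in range(num_workers)]


def shared_memory_sum(data, num_workers=4):
    total = [0]  # a single location visible to every thread
    lock = threading.Lock()

    def worker(chunk):
        partial = sum(chunk)
        with lock:  # serialize updates to the shared location
            total[0] += partial

    threads = [threading.Thread(target=worker, args=(c,))
               for c in split(data, num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


def message_passing_sum(data, num_workers=4):
    mailbox = queue.Queue()  # the only channel between workers and the coordinator

    def worker(rank, local_data):
        # Each worker reads only its own private copy of the data...
        partial = sum(local_data)
        # ...and shares its result solely by sending a message.
        mailbox.put((rank, partial))

    threads = [threading.Thread(target=worker, args=(rank, list(chunk)))
               for rank, chunk in enumerate(split(data, num_workers))]
    for t in threads:
        t.start()
    # The coordinator receives exactly one message per worker.
    result = sum(mailbox.get()[1] for _ in range(num_workers))
    for t in threads:
        t.join()
    return result


if __name__ == "__main__":
    values = list(range(1_000))
    print(shared_memory_sum(values), message_passing_sum(values))
```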

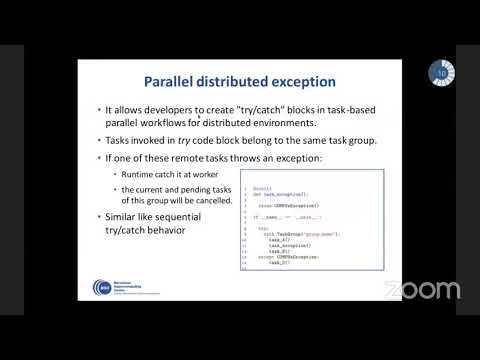

We describe data-level parallelism and task-level parallelism, and the task-parallel platforms that support the latter approach. Next comes fork-join parallelism, a simple model for implementing parallel programs. An example (computing Fibonacci numbers) shows how it can be used to implement parallel recursion.
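
The fork-join pattern for Fibonacci can be sketched with plain threads:

1. The parent spawns a child to compute fib(n - 1).
2. The parent computes fib(n - 2) itself.
3. The parent joins (syncs) with the child before adding the two results.

This is a minimal illustration that uses Python's `threading` module as the task-parallel platform; it is not the code from the video. Because of CPython's global interpreter lock, it shows the structure of fork-join parallelism rather than a real speedup. The hypothetical `cutoff` parameter switches to serial recursion for small n, so the number of threads stays manageable.

```python
import threading


def fib_serial(n: int) -> int:
    """Plain recursive Fibonacci, used below the cutoff."""
    return n if n < 2 else fib_serial(n - 1) + fib_serial(n - 2)


def fib_parallel(n: int, cutoff: int = 10) -> int:
    """Fork-join Fibonacci: spawn fib(n-1), compute fib(n-2), then sync."""
    if n < max(cutoff, 2):  # never fork at or below the base case
        return fib_serial(n)

    result = {}

    def child() -> None:
        result["x"] = fib_parallel(n - 1, cutoff)

    t = threading.Thread(target=child)
    t.start()                        # fork: the child may run in parallel...
    y = fib_parallel(n - 2, cutoff)  # ...with the parent's own work
    t.join()                         # join (sync): wait for the child to finish
    return result["x"] + y


if __name__ == "__main__":
    print(fib_parallel(20))
```

The cutoff (often called the grain size) trades available parallelism for lower spawning overhead. With `cutoff=2`, every call above the base case forks a thread.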

To analyze parallel programs, we introduce computation DAGs and describe the assumptions that underpin our analysis: an ideal parallel computer, processors of equal power, and no scheduling overhead. We then describe two concepts:

- work: the total time to execute the computation on a single processor;
- span: the length of the longest path through the DAG.

Finally, we show how to use these concepts to perform parallel analysis.
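
Work and span can be computed directly from a computation DAG. The sketch below assumes each vertex carries an integer cost, and edges are given as a map from each vertex to its predecessors. Every predecessor must also appear in the cost map.

- The work T_1 is the sum of all vertex costs.
- The span T_∞ is the cost of the longest (critical) path, found by visiting vertices in topological order.
- Their ratio T_1 / T_∞ is the parallelism.

For P processors, the work law and span law give T_P ≥ max(T_1 / P, T_∞), while a greedy scheduler achieves T_P ≤ T_1 / P + T_∞.

The helper `fib_work_span` applies the recurrences W(n) = W(n-1) + W(n-2) + 1 and S(n) = max(S(n-1), S(n-2)) + 1 to the Fibonacci example. It makes the simplifying assumption that each call costs one unit. All names are illustrative, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter


def work_and_span(cost, preds):
    """Return (work, span) of a DAG.

    cost:  vertex -> cost (time to execute that strand)
    preds: vertex -> set of vertices that must finish before it starts
    """
    graph = {v: preds.get(v, set()) for v in cost}
    work = sum(cost.values())
    finish = {}
    for v in TopologicalSorter(graph).static_order():
        start = max((finish[p] for p in graph[v]), default=0)
        finish[v] = start + cost[v]  # earliest finish time on unlimited processors
    span = max(finish.values(), default=0)
    return work, span


def running_time_bounds(work, span, p):
    """Lower bound from the work and span laws; upper bound for a greedy scheduler."""
    return max(work / p, span), work / p + span


def fib_work_span(n):
    """Work and span of fork-join fib(n), assuming every call costs one unit."""
    w, s = {0: 1, 1: 1}, {0: 1, 1: 1}
    for k in range(2, n + 1):
        w[k] = w[k - 1] + w[k - 2] + 1          # both subcalls contribute work
        s[k] = max(s[k - 1], s[k - 2]) + 1      # subcalls run in parallel
    return w[n], s[n]


if __name__ == "__main__":
    # A fork-join "diamond": a forks b and c, which join at d.
    cost = {"a": 1, "b": 3, "c": 1, "d": 1}
    preds = {"b": {"a"}, "c": {"a"}, "d": {"b", "c"}}
    work, span = work_and_span(cost, preds)
    print(work, span, work / span)  # work 6, span 5 (path a -> b -> d)
    print(running_time_bounds(work, span, 2))
    print(fib_work_span(10))
```

In the Fibonacci model, the work grows exponentially in n while the span grows only linearly. The parallelism therefore grows quickly with n.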

Topics: #parallel #work #span

References for papers mentioned in the video can be found at

For related content:
