
Multi-Label Classification on Unhealthy Comments - Finetuning RoBERTa with PyTorch - Coding Tutorial

Показать описание

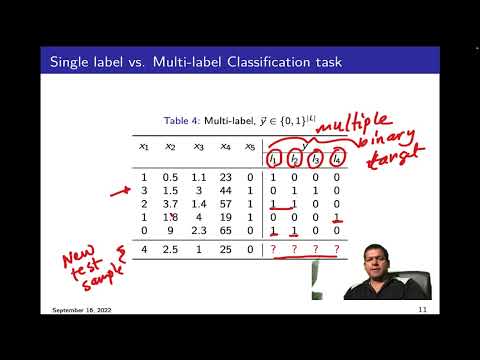

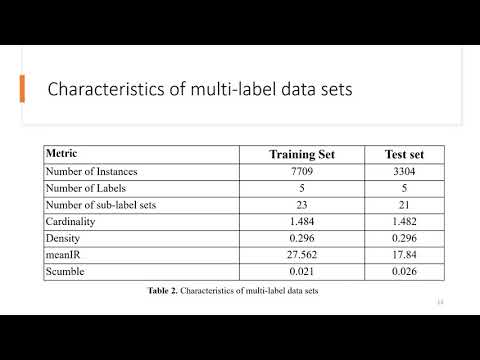

A practical Python coding guide. In this guide I fine-tune RoBERTa with PyTorch Lightning on a multi-label classification task, namely the Unhealthy Comment Corpus. The result is a RoBERTa-based classifier that predicts whether an online comment contains attributes such as sarcasm, hostility, or dismissiveness.
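In a multi-label task each comment can carry several attributes at once, so instead of one softmax over mutually exclusive classes, every label gets its own independent sigmoid and binary cross-entropy term. The sketch below is a minimal, dependency-free illustration of that idea for a single comment, not the notebook's code. The three label names are a hypothetical subset taken from the attributes mentioned above. In PyTorch the same per-element loss is provided by `torch.nn.BCEWithLogitsLoss` (averaged over all elements with its default `'mean'` reduction).

```python
import math

# Hypothetical label subset, for illustration only; the corpus defines its own columns.
LABELS = ["sarcastic", "hostile", "dismissive"]

def multi_hot(present: set[str]) -> list[float]:
    """Encode the attributes present in one comment as a 0/1 target vector."""
    return [1.0 if label in present else 0.0 for label in LABELS]

def bce_with_logits(logits: list[float], targets: list[float]) -> float:
    """Mean binary cross-entropy over labels, one independent sigmoid per label.

    Uses the numerically stable form max(z, 0) - z*y + log(1 + exp(-|z|)),
    which equals -[y*log(sigmoid(z)) + (1-y)*log(1 - sigmoid(z))].
    """
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)

# A comment that is both sarcastic and dismissive, but not hostile.
target = multi_hot({"sarcastic", "dismissive"})  # [1.0, 0.0, 1.0]
print(bce_with_logits([3.0, -2.0, 1.5], target))   # smaller loss: logits agree with the target
print(bce_with_logits([-3.0, 2.0, -1.5], target))  # larger loss: logits disagree
```

Because each label is scored independently, a comment can be predicted as sarcastic and dismissive at the same time, which a softmax head would discourage.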

---- TUTORIAL NOTEBOOK

Remember to press "Copy to Drive" to save a copy of the notebook for yourself.

Intro: 00:00:00

Video / project outline: 00:00:27

Getting Google Colab set up: 00:02:00

Imports: 00:03:23

Inspect data: 00:07:05

PyTorch dataset: 00:11:15

PyTorch Lightning data module: 00:27:08

Creating the model / classifier: 00:35:45

Training and evaluating model: 01:07:30
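When evaluating a multi-label model, each label's logit is turned into a yes/no decision with a threshold, and quality is usually reported per label, because a single accuracy figure can hide poor performance on rare attributes. The sketch below is hedged and dependency-free: the 0.5 probability threshold and per-label F1 are illustrative choices, not necessarily what the video uses, and the label names are hypothetical.

```python
import math

# Hypothetical label subset, for illustration only.
LABELS = ["sarcastic", "hostile", "dismissive"]

def predict(logits: list[list[float]], threshold: float = 0.5) -> list[list[int]]:
    """Turn per-label logits into 0/1 predictions (threshold must be in (0, 1)).

    sigmoid(z) >= threshold  <=>  z >= log(threshold / (1 - threshold)),
    so logits are compared directly and exp() is never called.
    """
    cut = math.log(threshold / (1.0 - threshold))
    return [[1 if z >= cut else 0 for z in row] for row in logits]

def per_label_f1(preds: list[list[int]], targets: list[list[int]]) -> dict[str, float]:
    """F1 score computed separately for each label column."""
    scores = {}
    for j, label in enumerate(LABELS):
        tp = sum(1 for p, t in zip(preds, targets) if p[j] == 1 and t[j] == 1)
        fp = sum(1 for p, t in zip(preds, targets) if p[j] == 1 and t[j] == 0)
        fn = sum(1 for p, t in zip(preds, targets) if p[j] == 0 and t[j] == 1)
        denom = 2 * tp + fp + fn
        # Convention used here: F1 = 0.0 when a label is neither predicted nor present.
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores

preds = predict([[2.0, -1.0, 0.5], [-3.0, 4.0, -0.2]])  # [[1, 0, 1], [0, 1, 0]]
print(per_label_f1(preds, [[1, 0, 0], [0, 1, 1]]))
# {'sarcastic': 1.0, 'hostile': 1.0, 'dismissive': 0.0}
```

Comparing logits against a precomputed cut-off is equivalent to thresholding sigmoid probabilities, but avoids overflow for very large or very small logits. The threshold can also be tuned per label on a validation set.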

This series attempts to offer a casual guide to Hugging Face and Transformer models, focused on implementation rather than theory. Let me know if you enjoy them! I will be doing future videos on computer vision if people are interested, so let me know in the comments :)

----- Research material for theory


