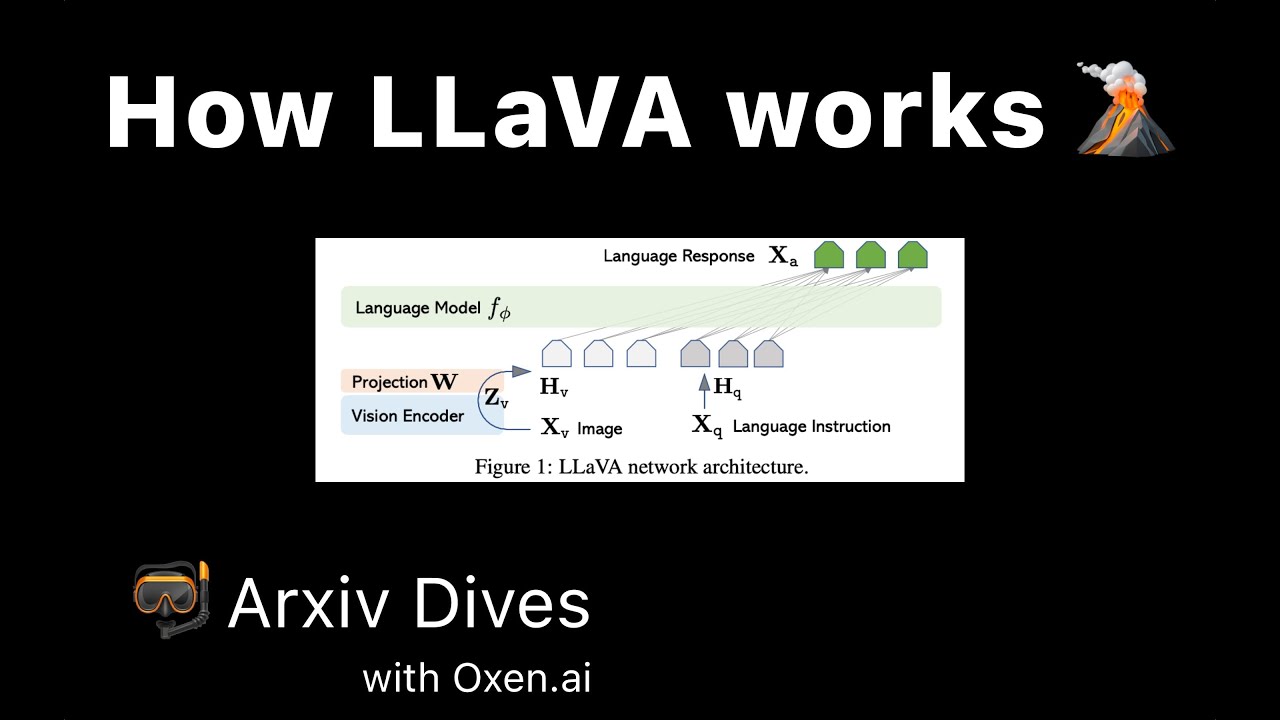

How LLaVA works 🌋 A Multimodal Open Source LLM for image recognition and chat.

This week we cover the LLaVA paper, which introduces a multimodal model that combines image recognition with an LLM through a chat-like interface, lowering the barrier to entry for many computer vision tasks.
