
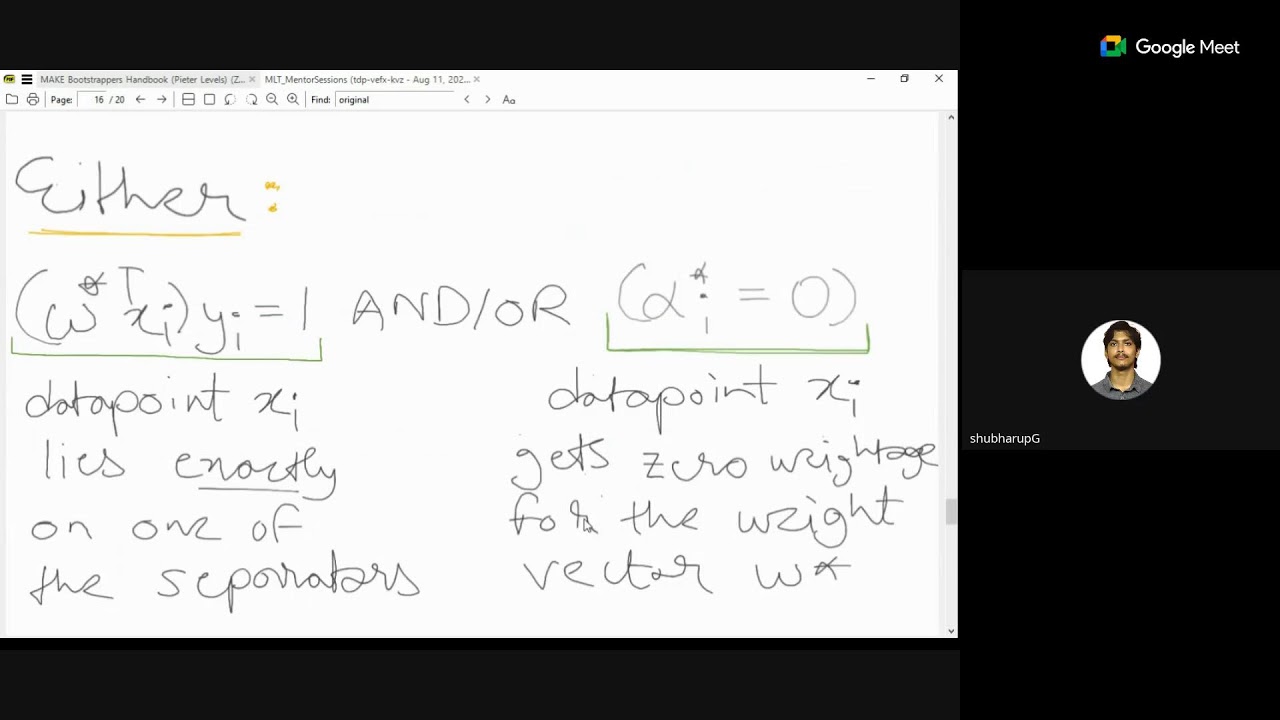

Week-10 | TA Session-1


WORLD CLASS DARTING LINE-UP!!! 🔥 | MODUS Super Series | Series 8 Week 10 | Group A Session 1

Week-10 | Session-1

Week 10 Day 2 // TABATA + BOOTY Building Workout

Trying this trend at 39 weeks pregnant #shorts

Cooku with Comali 5 | 10th & 11th August 2024 - Promo 1

ONE IN A BILLION | Gielinor Games (#10)

|| Result Reaction In Class 10th V/s In Medical College || #mbbs #result #medicalstudent #neet

Week-10-Lesson-1: System Testing

Maths 1 - Open Session - Week 10 (Maths I)

@SeoulInfernal vs @GZCharge | Midseason Madness Qualifiers | Week 10 Day 1

Fast Cut Week 10 | Love Thy Woman

Richard Goodall Sings 'How Am I Supposed To Live Without You' | Quarterfinals | AGT 2024

1 Month (4.2wks) Early Pregnancy Visualization - TVS Ultrasound | it is Possible

10th Planet girls are DIFFERENT 💪🏼 #ufc #bjj #jiujitsu

Week 10 Days 1-3 - Thrower Preseason Strength and Conditioning

I am VTV 2.0 😂🤣 | Cooku with Comali 5 | Episode Preview

Roni Sagi & Rhythm's Dog Dancing WOWS The World! | Quarterfinals | AGT 2024

How to look pretty at school ♡ #aesthetic #teen #glowup #selfcare #school #thatgirl #small

Reaction of my CBSE class 10th result 😮#class10th #boardresult #2023 #cbse #10thresult #reaction

Life of class 10th Students #minivlog

Cooku with Comali 5 | 10th & 11th August 2024 - Promo 4

Best time table class 10th | Timetable for class 10th |10th std time table 2022 |Schedule for 10th

almost the 10th month of Tretinoin || Dryness-peeling||but no more pimples

Pregnancy | Week by Week | Tamil | Week 10 | What to Expect

