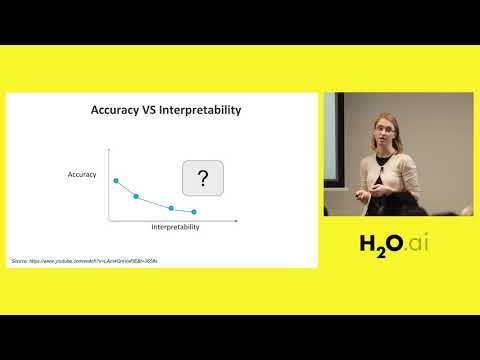

Interpretable Machine Learning Using LIME Framework - Kasia Kulma (PhD), Data Scientist, Aviva

- - -

Kasia discussed the complexities of interpreting black-box algorithms and how these may affect some industries. She presented the most popular methods for interpreting machine learning classifiers, for example feature importance, partial dependence plots and Bayesian networks. Finally, she introduced the Local Interpretable Model-Agnostic Explanations (LIME) framework for explaining the predictions of black-box learners, including text- and image-based models, using breast cancer data as a case study.
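The core LIME idea for tabular data can be sketched in a few steps: perturb the instance being explained, query the black box on the perturbed points, weight each point by its proximity to the instance, and fit a simple weighted linear surrogate whose coefficients serve as the local explanation. The NumPy sketch below is a simplified illustration under stated assumptions, not the `lime` package's API or the talk's code: `black_box` is a hypothetical stand-in for a trained classifier (no breast cancer data is used), `lime_explain`, the kernel width heuristic `0.75 * sqrt(d)` and the Gaussian perturbation scheme are assumptions, and the surrogate is plain weighted least squares with no feature discretization or feature selection.

```python
import numpy as np


def black_box(X):
    """Hypothetical opaque classifier returning P(class = 1) for each row of X.

    It stands in for any trained model; the explainer below only calls it
    and never inspects its internals.
    """
    z = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 0.5 * X[:, 0] * X[:, 2]
    return 1.0 / (1.0 + np.exp(-z))


def lime_explain(predict_fn, x, scale, num_samples=5000, kernel_width=None, seed=0):
    """Explain predict_fn near x with a proximity-weighted linear surrogate.

    Returns (intercept, weights); weights[j] approximates the local effect of a
    one-`scale[j]` change in feature j on the predicted probability.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # assumed width heuristic

    # 1. Perturb: sample points around x; `scale` sets each feature's spread.
    offsets = rng.normal(size=(num_samples, d))  # standardized offsets
    Z = x + offsets * scale

    # 2. Query the black box on the perturbed points.
    y = predict_fn(Z)

    # 3. Weight each sample by proximity to x: exponential kernel on the
    #    Euclidean distance measured in standardized units.
    dist = np.linalg.norm(offsets, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)

    # 4. Fit the interpretable surrogate: weighted least squares with an
    #    intercept, solved by scaling rows by sqrt(w) before ordinary lstsq.
    A = np.column_stack([np.ones(num_samples), offsets])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]


# Explain the prediction for one instance (the origin, with unit scales).
x0 = np.zeros(3)
intercept, weights = lime_explain(black_box, x0, scale=np.ones(3))
print("local prediction ~", round(float(intercept), 3))
for j, wj in enumerate(weights):
    print(f"feature {j}: {wj:+.3f}")
```

At this instance, feature 0 should receive a positive weight, feature 1 a negative one, and feature 2 a weight near zero, because feature 2 only acts through its interaction with feature 0, which is zero here. Explaining a different instance (for example one with a large feature 0) would give feature 2 a non-zero weight, which is the sense in which LIME explanations are local rather than global like feature importance or partial dependence.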

Explainable AI explained! | #3 LIME

Understanding LIME | Explainable AI

Interpretable vs Explainable Machine Learning

Interpretable Machine Learning - Local Interpretable Model-agnostic Explanations (LIME) - Pitfalls

Interpretable Machine Learning - Local Interpretable Model-agnostic Explanations (LIME) - Examples

What is Interpretable Machine Learning - ML Explainability - with Python LIME Shap Tutorial

Interpretable Machine Learning - Local Interpretable Model-agnostic Explanations (LIME) - Intro

SHAP values for beginners | What they mean and their applications

Generative AI and Prompt Engineering | Faculty Development Program (FDP) | Day 12 | 360DigiTMG

❌Explain any Machine Learning Model with LIME ❌NLP Model Interpretability with LIME

Explainable AI, Session 4: Intro to LIME

Interpretable Machine Learning with LIME - How LIME works? 10 Min. Tutorial with Python Code

Interpretable Machine Learning - Local Interpretable Model-agnostic Explanations (LIME) - LIME

How To Interpret The ML Model? Is Your Model Black Box? Lime Library

Interpretable Machine Learning for Image Classification with LIME - 5 Min. Tutorial with Python Code

Episode 65. Explainable AI: LIME - Local Interpretable Model-Agnostic Explanations

Interpreting the Machine Learning algorithm using LIME python package | Viswateja

TidyTuesday: Creating Interpretable Black Box Models using LIME and ALE

ML Model Explainability using LIME

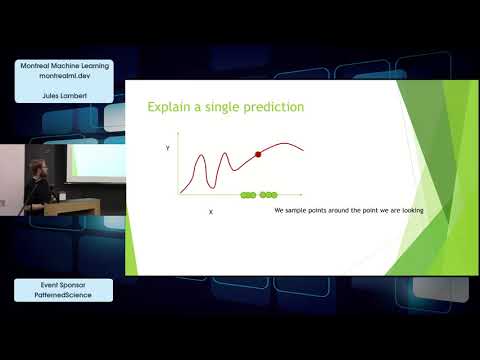

Tutorial on model explanation with LIME - Jules Lambert

Explainable AI in Python with LIME (Ft. Diogo Resende)

Interpretable Machine Learning: Methods for understanding complex models

Interpretable Machine Learning Models
