How to Loop Through URLs with Python Selenium and Print CSS Selector Elements Efficiently

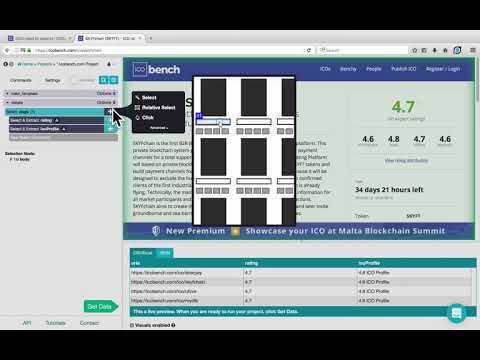

In this guide, we detail how to loop through a list of URLs using Python and Selenium to extract product sales from a website. Learn troubleshooting tips for common errors encountered during the process.

---

Visit these links for the original content and further details, such as alternate solutions, the latest updates on the topic, comments, and revision history. For example, the original title of the question was: How to loop url in array and print css selector element in driver, Python Selenium

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

Looping Through URLs and Extracting Data with Selenium

If you've ever tried to scrape data from multiple webpages using Selenium, you know that it can be challenging. How do you effectively loop through an array of URLs and print out specific data elements without running into errors? In this guide, we’ll walk you through the process of accomplishing that using Python and Selenium.

Understanding the Problem

You want to find the number of sales (or sold items) for a collection of products listed on an e-commerce site. The challenge is twofold: the script must handle multiple URLs in one run, and it must cope with pages where the expected element is not present.

The Sample Code

Let's look at the code snippet that aims to solve this problem. Below is the initial attempt, which loops through the product URLs and prints the sold-items value for each page:

[[See Video to Reveal this Text or Code Snippet]]

Issues We Encountered

[[See Video to Reveal this Text or Code Snippet]]

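This error occurs because the expected element doesn't exist on one or more of the pages you're trying to access. When `find_element` cannot locate a match, Selenium raises an exception (typically `NoSuchElementException`), and because nothing catches it, the script stops at that URL and never reaches the remaining ones.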
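As a rough illustration of this failure mode, an unguarded loop might look like the sketch below. The original snippet is not reproduced here, so the function name `print_sold_unguarded`, its parameters, and the selector you pass in are all assumptions; `driver` stands for any Selenium WebDriver instance.

```python
# Hypothetical reconstruction of an unguarded loop; not the original snippet.
# Selenium's By.CSS_SELECTOR constant is the string "css selector".
CSS_SELECTOR = "css selector"


def print_sold_unguarded(driver, urls, css_selector):
    """Print the text of the first element matching css_selector on each page."""
    for url in urls:
        driver.get(url)
        # find_element raises (NoSuchElementException in Selenium) when nothing
        # matches. Nothing here catches it, so the loop stops at that URL.
        element = driver.find_element(CSS_SELECTOR, css_selector)
        print(element.text)
```

If the second of three URLs lacks the element, the third URL is never visited.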

Improving the Solution

Adding Error Handling

To ensure that your program continues executing even if an error occurs, we can add a try-except block around the code that retrieves the element. Here's an improved version of the code:

[[See Video to Reveal this Text or Code Snippet]]

Key Changes Explained

Try-Except Block: This structure allows the program to catch exceptions. If the element is not found, it will print "Error:" followed by the specific error message, allowing you to see where things didn't go as planned without stopping the entire script.

Console Output: You can now track which URLs return valid results and which cause exceptions.
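The two changes above can be sketched as follows. This is a minimal illustration rather than the exact code from the video: the function name `collect_sold_counts`, the returned dictionary, and the selector argument are assumptions added for the example, and `driver` is expected to be a Selenium WebDriver instance.

```python
# Same value as Selenium's By.CSS_SELECTOR constant.
CSS_SELECTOR = "css selector"


def collect_sold_counts(driver, urls, css_selector):
    """Return {url: element text or None}, printing an error for each failure."""
    results = {}
    for url in urls:
        try:
            driver.get(url)
            element = driver.find_element(CSS_SELECTOR, css_selector)
            results[url] = element.text
            print(url, "->", element.text)
        except Exception as exc:  # broad on purpose, as described in the text
            # Report the problem and move on to the next URL.
            print("Error:", exc)
            results[url] = None
    return results
```

Catching `Exception` keeps the loop running no matter what goes wrong. If you only want to tolerate missing elements, narrowing the handler to `selenium.common.exceptions.NoSuchElementException` would let unrelated failures surface instead of being silently logged.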

Running the Code

To run the code, make sure the following setup is in place:

Selenium Installed: Ensure you have the Selenium library installed (pip install selenium).

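WebDriver: Download the ChromeDriver release that matches your installed Chrome browser version. (Selenium 4.6 and later can usually locate or download a matching driver automatically through Selenium Manager.)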

Modify the Path: Make sure to change /path/to/chromedriver to the actual path where the ChromeDriver is located on your machine.
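The setup steps above could be wired together roughly as follows. This is a sketch assuming Selenium 4; `make_chrome_driver` is a hypothetical helper name, and `/path/to/chromedriver` remains a placeholder that you replace with your own location.

```python
def make_chrome_driver(chromedriver_path=None):
    """Create a Chrome WebDriver, optionally from an explicit ChromeDriver path."""
    # Imported here so this module can be loaded even where Selenium is absent.
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    if chromedriver_path:
        # Explicit path, e.g. "/path/to/chromedriver" replaced with your own.
        service = Service(executable_path=chromedriver_path)
        return webdriver.Chrome(service=service)
    # Without a path, Selenium 4.6+ tries to locate a matching driver itself.
    return webdriver.Chrome()
```

A typical call would be `driver = make_chrome_driver("/path/to/chromedriver")`, followed by `driver.quit()` once the loop has finished so the browser process is closed.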

Conclusion

By wrapping each element lookup in a try-except block, you get a more resilient web scraping script: it works through every URL in the list and reports the pages where the element is missing instead of stopping at the first failure. Checking each sales count becomes smoother and less error-prone, which helps you gather more data for analysis.

Stay curious and keep scraping!
