How to Easily Remove Duplicate Rows from CSV Files Using Python

Learn how to effectively remove duplicate rows from CSV files using Python and pandas. This step-by-step guide will help you manage your data efficiently and avoid repetition.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: How to remove duplicate/repeated rows in csv with python?

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

How to Easily Remove Duplicate Rows from CSV Files Using Python

When you scrape data from the web or keep appending records to a CSV file, duplicate rows are a common problem. Left in place, they inflate counts and averages and can lead to wrong conclusions. This guide shows how to remove them from a CSV file using Python and the pandas library.

The Problem: Handling Duplicate Data

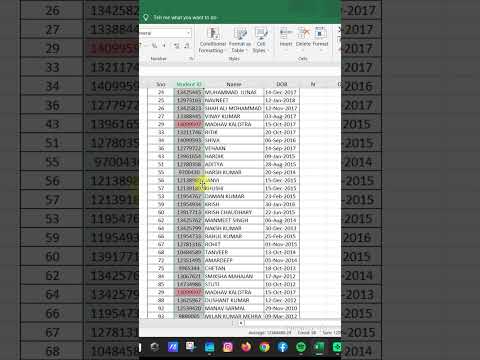

Imagine you have scraped data from various websites, and while saving this data into a CSV file, you accidentally append some duplicate entries. This can create confusion later when analyzing the data or making important decisions based on it. The CSV file might look like this:

[[See Video to Reveal this Text or Code Snippet]]
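The original snippet is not reproduced here. As a stand-in, here is a small hypothetical sample: the column names Date, Time and School are the ones used later in this guide, while the values are invented for illustration. The sketch uses only the standard library to show how many of the rows are repeats.

```python
import csv
import io

# Hypothetical sample data: the column names come from the guide,
# the values are invented for illustration only.
SAMPLE_CSV = """Date,Time,School
2024-01-05,09:00,Lincoln High
2024-01-05,09:00,Lincoln High
2024-01-06,10:30,Roosevelt Middle
2024-01-05,09:00,Lincoln High
"""

rows = list(csv.reader(io.StringIO(SAMPLE_CSV)))
header, data = rows[0], rows[1:]

# Convert each row to a tuple so it can be placed in a set.
unique_rows = set(map(tuple, data))

print(header)
print(len(data), "data rows,", len(unique_rows), "unique")
```

In this sample, the same Lincoln High entry appears three times, so only two of the four data rows are distinct.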

Why Should You Remove Duplicates?

Accuracy: Having duplicates can skew your data analysis and lead to incorrect conclusions.

Efficiency: Smaller datasets without duplicates are typically easier to manage and analyze.

Clarity: Non-redundant data is simpler for others (and future you) to understand and utilize.

The Solution: Using pandas to Remove Duplicates

pandas is a powerful data manipulation library in Python that simplifies data reading, writing, and analysis. Here’s how you can use it to remove duplicate rows from a CSV file:

Step-by-Step Guide

Install pandas (if you haven't yet):

If you don't have pandas installed, you can do so using pip:

[[See Video to Reveal this Text or Code Snippet]]
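The usual command is `pip install pandas`, run in a terminal. If you are unsure whether pandas is already available in your environment, a quick check like the following sketch can tell you before you go further. It relies only on the standard library, so it runs even when pandas is missing.

```python
import importlib.util

# find_spec returns None when the package cannot be found
# in the current Python environment.
spec = importlib.util.find_spec("pandas")

if spec is None:
    print("pandas is not installed; run: pip install pandas")
else:
    print("pandas is installed")
```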

Read Your CSV File:

Begin by loading your CSV file into a pandas DataFrame:

[[See Video to Reveal this Text or Code Snippet]]
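A minimal sketch of this step, assuming a file with the Date, Time and School columns mentioned later in this guide. To keep the example self-contained, it reads from an in-memory string; with a real file you would pass its path instead (the name `data.csv` in the comment is a placeholder, not a file from the original).

```python
import io

import pandas as pd

# Hypothetical CSV content; the values are invented for illustration.
csv_text = (
    "Date,Time,School\n"
    "2024-01-05,09:00,Lincoln High\n"
    "2024-01-05,09:00,Lincoln High\n"
)

# With a file on disk this would be: df = pd.read_csv("data.csv")
df = pd.read_csv(io.StringIO(csv_text))

print(df.shape)          # (rows, columns)
print(list(df.columns))
```

Loading does not remove anything: both copies of the repeated row are still in the DataFrame at this point.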

Remove Duplicates:

You can define a duplicate in two ways: a row that is identical to another in every column, or a row that matches another on a chosen subset of columns.

To Remove Complete Row Duplicates:

[[See Video to Reveal this Text or Code Snippet]]
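In pandas this is typically done with `DataFrame.drop_duplicates()`. The sketch below uses hypothetical rows and also calls `DataFrame.duplicated()` first, which is an optional extra step to see how many rows will be dropped.

```python
import pandas as pd

# Hypothetical rows; rows 0, 1 and 3 are identical in every column.
df = pd.DataFrame(
    {
        "Date": ["2024-01-05", "2024-01-05", "2024-01-06", "2024-01-05"],
        "Time": ["09:00", "09:00", "10:30", "09:00"],
        "School": ["Lincoln High", "Lincoln High", "Roosevelt Middle", "Lincoln High"],
    }
)

# Count rows that repeat an earlier row before removing anything.
n_repeats = int(df.duplicated().sum())

# With no arguments, drop_duplicates() compares all columns and keeps
# the first occurrence of each distinct row.
deduped = df.drop_duplicates()

print(n_repeats)
print(deduped)
```

Note that `drop_duplicates()` returns a new DataFrame and keeps the original index labels of the surviving rows; assign the result to a variable, as above, or the change is lost.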

To Remove Partial Duplicates (Based on Specific Columns):

Specify which columns to check for duplicates:

[[See Video to Reveal this Text or Code Snippet]]

Replace ['Date', 'Time', 'School'] with whichever columns define a duplicate in your dataset.
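As a sketch of the column-based approach, the `subset` parameter of `drop_duplicates()` limits the comparison to the listed columns. The `Score` column below is invented purely so that two rows differ while sharing the same Date, Time and School; the `keep` parameter then decides which of the matching rows survives.

```python
import pandas as pd

# Hypothetical rows; "Score" is an invented column used only to make
# two rows differ while sharing the same Date, Time and School.
df = pd.DataFrame(
    {
        "Date": ["2024-01-05", "2024-01-05", "2024-01-06"],
        "Time": ["09:00", "09:00", "10:30"],
        "School": ["Lincoln High", "Lincoln High", "Roosevelt Middle"],
        "Score": [71, 88, 64],
    }
)

key_columns = ["Date", "Time", "School"]

# Default keep="first": the earliest matching row is retained.
first_kept = df.drop_duplicates(subset=key_columns)

# keep="last": the most recently appended matching row is retained.
last_kept = df.drop_duplicates(subset=key_columns, keep="last")

print(first_kept)
print(last_kept)
```

When a file is appended to over time, `keep="last"` can be the better choice if later rows are corrections of earlier ones; that depends on how your data is collected.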

Save Your Cleaned Data:

Once the duplicates are removed, save the cleaned DataFrame back to a CSV file:

[[See Video to Reveal this Text or Code Snippet]]
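A minimal sketch of the save step using `DataFrame.to_csv()`. The output name `cleaned.csv` and the temporary directory are assumptions made so the example can run anywhere; in practice you would choose your own path. Passing `index=False` keeps the DataFrame's row labels out of the file, which avoids an extra unnamed column when the file is read again.

```python
import os
import tempfile

import pandas as pd

# Hypothetical, already de-duplicated data.
deduped = pd.DataFrame(
    {
        "Date": ["2024-01-05", "2024-01-06"],
        "Time": ["09:00", "10:30"],
        "School": ["Lincoln High", "Roosevelt Middle"],
    }
)

# Placeholder output location; replace with your own path.
out_path = os.path.join(tempfile.mkdtemp(), "cleaned.csv")

# index=False writes only the data columns, not the row labels.
deduped.to_csv(out_path, index=False)

with open(out_path, encoding="utf-8") as f:
    text = f.read()

print(text)
```

Writing to a new file rather than overwriting the original leaves the raw data intact in case the duplicate criteria need to be revisited.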

Final Thoughts

Removing duplicates with pandas takes only a few steps: load the CSV into a DataFrame, drop duplicate rows across all columns or a chosen subset, and save the result. With clean data in place, you can focus on your analysis without worrying about repeated entries.

Now that you know how to handle duplicate rows in CSV files, go ahead and apply this technique to your datasets! Happy coding!
