AWS Tutorials - Working with Data Sources in AWS Glue Job

When writing an AWS Glue ETL job, the question arises whether to read data from the data source directly or through the Glue Data Catalog table that describes the source. The video explains why using the Data Catalog is recommended, and includes a demo of accessing data in Amazon S3, PostgreSQL, and Amazon Redshift through the Glue Data Catalog.
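The sketch below contrasts the two approaches. It assumes a Python Glue job, and the helper names `read_from_catalog` and `read_s3_directly` (along with any database, table, or bucket names used with them) are hypothetical and not taken from the video. A catalog-based read only names a database and table, because the catalog entry already records the location, format, schema, and (for JDBC sources) the Glue connection. A direct read has to repeat those details in the job code. The Glue context is passed in as a parameter so the helpers themselves do not import `awsglue`.

```python
from typing import Any, List, Optional


def read_from_catalog(glue_context: Any, database: str, table_name: str,
                      redshift_tmp_dir: Optional[str] = None) -> Any:
    """Read a table through the Glue Data Catalog (hypothetical helper).

    Works the same way for S3, PostgreSQL, or Redshift tables, because the
    source-specific details live in the catalog table, not in the job.
    """
    kwargs = {"database": database, "table_name": table_name}
    if redshift_tmp_dir is not None:
        # Redshift reads are staged through S3, so a temporary S3 path is passed.
        kwargs["redshift_tmp_dir"] = redshift_tmp_dir
    return glue_context.create_dynamic_frame.from_catalog(**kwargs)


def read_s3_directly(glue_context: Any, paths: List[str], fmt: str) -> Any:
    """Read S3 objects without the catalog (hypothetical helper).

    The location and format are hard-coded in the job, so any change to the
    source has to be made here as well.
    """
    return glue_context.create_dynamic_frame.from_options(
        connection_type="s3",
        connection_options={"paths": paths},
        format=fmt,
    )
```

In an actual Glue job, the context would typically be created with `GlueContext(SparkContext.getOrCreate())` (imported from `awsglue.context` and `pyspark.context`) and then passed in, for example `read_from_catalog(glue_context, "sales_db", "orders")`, where both names are placeholders. `redshift_tmp_dir` is exposed because reading a Redshift table requires an S3 staging directory.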

AWS In 5 Minutes | What Is AWS? | AWS Tutorial For Beginners | AWS Training | Simplilearn

Getting Started With AWS Cloud | Step-by-Step Guide

AWS Tutorial For Beginners | AWS Full Course - Learn AWS In 10 Hours | AWS Training | Edureka

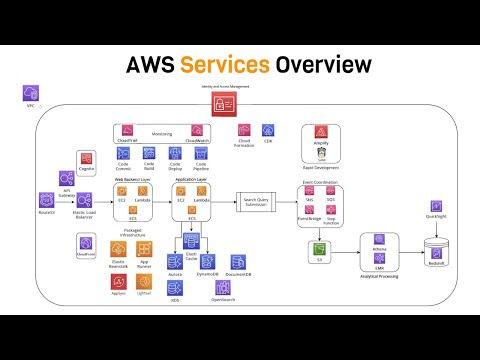

Top 50+ AWS Services Explained in 10 Minutes

AWS In 10 Minutes | AWS Tutorial For Beginners | AWS Cloud Computing For Beginners | Simplilearn

Amazon/AWS VPC (Virtual Private Cloud) Basics | VPC Tutorial | AWS for Beginners

Amazon/AWS EC2 (Elastic Compute Cloud) Basics | Create an EC2 Instance Tutorial |AWS for Beginners

What is AWS? AWS Cloud Computing for Beginners | Explained in Plain English

Understanding Security in Cloud, a core AWS Well-Architected Pillar

Simple Queue Service (SQS) Basics | AWS Cloud Computing Tutorial for Beginners

AWS IAM Core Concepts You NEED to Know

Beginners Tutorial to Terraform with AWS

AWS VPC Beginner to Pro - Virtual Private Cloud Tutorial

AWS EC2 Tutorial For Beginners | What Is AWS EC2? | AWS EC2 Tutorial | AWS Training | Simplilearn

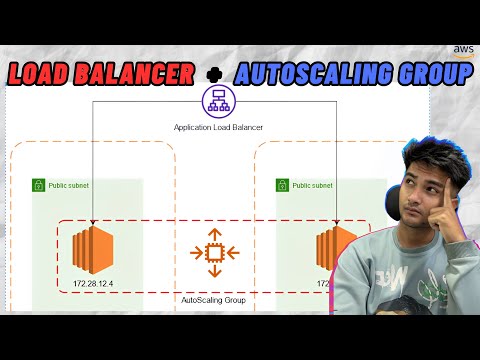

AWS Tutorial to create Application Load Balancer and Auto Scaling Group

Intro to AWS - The Most Important Services To Learn

What is Amazon Web Services? AWS Explained | Tutorial & Resources

Create a REST API with API Gateway and Lambda | AWS Cloud Computing Tutorials for Beginners

AWS Vs. Azure Vs. Google Cloud

🧑💻 Learn AWS as a beginner for free #aws #programming #amazon #tech #cloudcomputing #awstraining...

AWS Certified Cloud Practitioner Training 2020 - Full Course

AWS & Cloud Computing for beginners | 50 Services in 50 Minutes

🔥 AWS in 60 Seconds! 🔥#aws #shorts

How to learn AWS | AWS Tutorial For Beginners| #ytshorts #shortsvideo #learnaws
