Training Series: Create a Knowledge Graph: A Simple ML Approach

This talk will start with unstructured text and end with a knowledge graph in Neo4j using standard Python packages for Natural Language Processing. From there, we will explore what can be done with that knowledge graph using the tools available with the Graph Data Science Library.
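
The pipeline described above (unstructured text in, graph statements out) can be sketched roughly as follows. This is a minimal illustration, not the talk's actual code: the description does not name the NLP packages used, so a naive rule-based extractor stands in for a real NLP library here, and the sample text, the `Entity` label, and the function names `extract_triples` and `to_cypher` are all assumptions made for the example.

```python
import re

# Hypothetical toy input; the talk's actual corpus is not given in the description.
TEXT = "Marie Curie discovered polonium. Marie Curie won the Nobel Prize."


def extract_triples(text):
    """Naive stand-in for an NLP pipeline.

    Treats each sentence as "<Capitalized subject> <verb> <object>" and
    returns a list of (subject, verb, object) tuples.
    """
    triples = []
    for sentence in re.split(r"[.!?]\s*", text):
        tokens = sentence.split()
        # Subject: the leading run of capitalized tokens.
        i = 0
        while i < len(tokens) and tokens[i][0].isupper():
            i += 1
        if i == 0 or i + 1 >= len(tokens):
            continue  # no subject found, or nothing left for verb + object
        subject = " ".join(tokens[:i])
        verb = tokens[i]
        obj_tokens = tokens[i + 1:]
        # Drop a leading article so "the Nobel Prize" becomes "Nobel Prize".
        if obj_tokens[0].lower() in {"a", "an", "the"}:
            obj_tokens = obj_tokens[1:]
        if not obj_tokens:
            continue
        triples.append((subject, verb, " ".join(obj_tokens)))
    return triples


def to_cypher(triples):
    """Turn triples into (query, parameters) pairs using Cypher MERGE.

    Node names are passed as parameters. Relationship types cannot be
    parameterized in Cypher, so the verb is sanitized and inlined.
    The `Entity` label is an assumption for this sketch.
    """
    statements = []
    for subject, verb, obj in triples:
        rel_type = re.sub(r"\W+", "_", verb).upper()
        query = (
            "MERGE (s:Entity {name: $subject}) "
            "MERGE (o:Entity {name: $object}) "
            f"MERGE (s)-[:{rel_type}]->(o)"
        )
        statements.append((query, {"subject": subject, "object": obj}))
    return statements


if __name__ == "__main__":
    for query, params in to_cypher(extract_triples(TEXT)):
        print(query, params)
```

Each `(query, params)` pair could then be sent to a running Neo4j instance, for example with `session.run(query, params)` from the official `neo4j` Python driver. Using `MERGE` rather than `CREATE` keeps the same entity from being duplicated when it appears in several sentences.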

Training Series: Intro to Neo4j Graph Database

APAC Training Series - Knowledge Graphs with ChatGPT

Building Knowledge Graphs in 10 Steps

Training Series - Intro to Neo4j

Wheatstone bridge ITI practical classes #expriment

PowerPoint Just Got COOLER! #short

Top 5 Coding Languages You Need to Learn in 2024!#Coding2024#LearnToCode#TopProgrammingLanguages #ai

Brief Introduction To Knowledge Graph In NLP

Fundamentals of Nursing: Clinical Skills – Course Trailer | Lecturio Nursing

Build a Small Knowledge Graph Part 2 of 3: Managing Graph Data With Cayley

How to Make a Google Knowledge Graph | Step-by-step Tutorial

Build a Small Knowledge Graph Part 3 of 3: Activating Graph Data With Actions

5 - From Text to a Knowledge Graph The Information Extraction Pipeline

SketchUp Training Series: Tape Measure tool

Hidden Ques from Ncert | ft. Neet Topper | #neet2025 #pcmb

Outlook TOP Tip; Use Categories to Colour Code Your Calendar

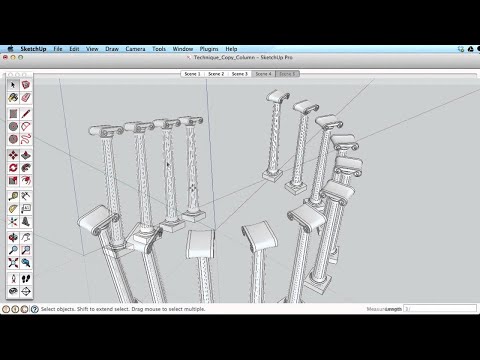

SketchUp Training Series: Copies and Arrays

Benefits of Audio and Video Based Learning for Fashion Product Knowledge Training yt

Capture Knowledge and Build Training Content from Slack

How To Master 5 Basic Cooking Skills | Gordon Ramsay

Cleaning Up Sections and Elevations - ARCHICAD Training Series 3 - 67/84
