GPT-4 Tutorial: How to Chat With Multiple PDF Files (~1000 pages of Tesla's 10-K Annual Reports)

In this video we'll learn how to use OpenAI's new GPT-4 API to 'chat' with and analyze multiple PDF files. In this case, I use three 10-K annual reports for Tesla (~1000 PDF pages).

OpenAI recently announced GPT-4 (its most powerful AI), which can process up to 25,000 words (about eight times as many as GPT-3), process images, and handle much more nuanced instructions than GPT-3.5.

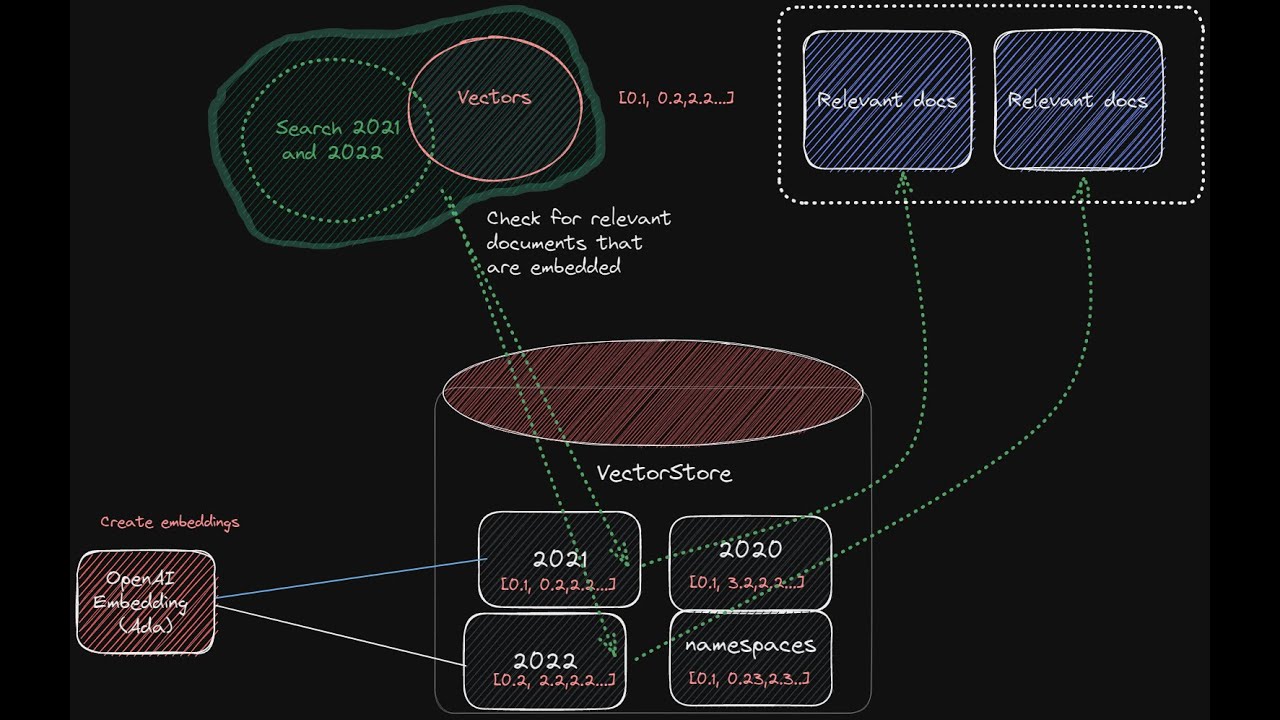

You'll learn how to use LangChain (a framework that makes it easier to assemble the components of a chatbot) and Pinecone, a 'vectorstore' that stores your documents as numeric 'vectors'. You'll also learn how to create a frontend chat interface that displays the results alongside the source documents.
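To make the 'vectorstore' idea concrete, here is a minimal in-memory sketch of the general pattern: split text into chunks, turn each chunk into a numeric vector, and return the stored chunks closest to a question along with their source. It is not the code from the video and does not use the Pinecone or LangChain APIs. `toyEmbed`, `InMemoryVectorStore`, and the file names are hypothetical stand-ins, and the word-hashing "embedding" is only a placeholder for a real embedding model.

```typescript
// Hypothetical in-memory sketch; NOT the Pinecone or LangChain API.
const DIMENSIONS = 64;

interface StoredChunk {
  source: string; // which document the chunk came from
  text: string;
  vector: number[];
}

// Split text into chunks of at most `wordsPerChunk` words.
function splitIntoChunks(text: string, wordsPerChunk: number): string[] {
  if (wordsPerChunk < 1) {
    throw new Error("wordsPerChunk must be at least 1");
  }
  const words = text.split(/\s+/).filter((word) => word.length > 0);
  const chunks: string[] = [];
  for (let i = 0; i < words.length; i += wordsPerChunk) {
    chunks.push(words.slice(i, i + wordsPerChunk).join(" "));
  }
  return chunks;
}

// Placeholder embedding (assumption): hash each word into one of
// DIMENSIONS buckets and count occurrences. A real pipeline would
// call an embedding model here instead.
function toyEmbed(text: string): number[] {
  const vector: number[] = [];
  for (let i = 0; i < DIMENSIONS; i++) {
    vector.push(0);
  }
  const words = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  for (const word of words) {
    let bucket = 0;
    for (let i = 0; i < word.length; i++) {
      bucket = (bucket * 31 + word.charCodeAt(i)) % DIMENSIONS;
    }
    vector[bucket] += 1;
  }
  return vector;
}

// Cosine similarity: 1 for identical directions, 0 for orthogonal vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

class InMemoryVectorStore {
  private chunks: StoredChunk[] = [];

  // Chunk a document, embed each chunk, and keep it with its source name.
  addDocument(source: string, text: string, wordsPerChunk: number = 50): void {
    for (const chunkText of splitIntoChunks(text, wordsPerChunk)) {
      this.chunks.push({ source, text: chunkText, vector: toyEmbed(chunkText) });
    }
  }

  // Return the `topK` stored chunks most similar to the query.
  search(query: string, topK: number = 2): StoredChunk[] {
    const queryVector = toyEmbed(query);
    return this.chunks
      .map((chunk) => ({ chunk, score: cosineSimilarity(queryVector, chunk.vector) }))
      .sort((a, b) => b.score - a.score)
      .slice(0, topK)
      .map((entry) => entry.chunk);
  }
}

// Usage with made-up file names and text:
const demoStore = new InMemoryVectorStore();
demoStore.addDocument("report-a.pdf", "Risk factors include supply chain disruption");
demoStore.addDocument("report-b.pdf", "Revenue grew because vehicle deliveries increased");
for (const hit of demoStore.search("supply chain risk", 1)) {
  console.log(hit.source + ": " + hit.text);
}
```

Keeping the `source` field on every chunk is what lets a chat interface show the source documents next to an answer. In a setup like the one described, the retrieved chunks would then be passed to the language model as context for the question.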

A similar process can be applied to other use cases you want to build a chatbot for: PDFs, websites, Excel, or other file formats.

Visuals & Code:

🖼 Visual guide download + GitHub repo (this is the base template used for this demo):

Timestamps:

01:02 PDF demo (analysis of 1,000 pages of annual reports)

06:01 Visual overview of the multiple-PDF chatbot architecture

17:40 Code walkthrough pt.1

25:15 Pinecone dashboard

28:30 Code walkthrough pt.2

#gpt4 #investing #finance #stockmarket #stocks #trading #openai #langchain #chatgpt #langchainjavascript #langchaintypescript #langchaintutorial
