Python Tutorial : Feature engineering and overfitting

---

Feature engineering uses domain knowledge and common sense to describe an object with numbers. Although adding more features can improve performance, it can also increase the risk of overfitting. In this lesson, you will learn more about this interesting trade-off.

Sometimes, the raw data cannot be arranged as a table. For example, consider electrocardiogram (or ECG) traces for a number of individuals. Each ECG trace is a time series, possibly of variable length, that cannot fit in one cell of a table.

Instead, in the dataset shown here, experts extracted over 250 one-dimensional numerical summaries from each ECG. These range from simple summaries like heart rate to very complex properties of the signal with weird names like T-wave-amp, all of which can be useful in detecting a medical condition known as arrhythmia.

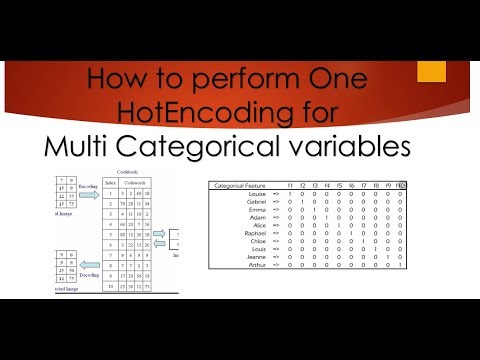

Even if the data are tabular, some of the columns might be non-numeric. Here is an example from the credit scoring dataset: the purpose of the loan takes values such as "buy a new car", "education" or "retraining". LabelEncoder will map these values onto a range of numbers.

But the classifier is then confused. It thinks that the categories have a natural ordering. For example, a decision tree might try to split the range in two. If it splits at 4, it is putting loans for business together with loans for a microwave oven!
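To make the ordering problem concrete, here is a minimal sketch. The category strings are hypothetical stand-ins, not the actual values from the credit scoring dataset. It shows that LabelEncoder numbers the categories in sorted order, so a threshold on the encoded column groups categories that merely sit next to each other alphabetically.

```python
from sklearn.preprocessing import LabelEncoder

# Hypothetical loan purposes, for illustration only
purpose = ["buy_new_car", "education", "retraining",
           "business", "buy_microwave_oven"]

encoder = LabelEncoder()
codes = encoder.fit_transform(purpose)

# Integers are assigned by sorted (alphabetical) order of the class names
mapping = dict(zip(encoder.classes_, range(len(encoder.classes_))))
print(mapping)

# A tree split such as "code <= 1" lumps together whatever happens to be
# encoded as 0 and 1, regardless of meaning
low_side = [name for name, code in mapping.items() if code <= 1]
print(low_side)
```

With these made-up labels, "business" and "buy_microwave_oven" land on the same side of the split purely because of alphabetical order.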

A different approach is to use one-hot encoding, implemented by the pandas function get_dummies(). This creates one new dummy variable for each category, taking the value 1 for each example that falls in that category and 0 otherwise. You can see the first row of the data on the left, printed vertically for readability. No artificial ordering is introduced.
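A small sketch of one-hot encoding with pandas follows. The DataFrame and its column names are assumptions made up for the example; only pd.get_dummies() itself comes from the lesson.

```python
import pandas as pd

# Hypothetical slice of a credit scoring table
credit = pd.DataFrame({
    "amount": [5000, 1200, 800],
    "purpose": ["buy_new_car", "education", "retraining"],
})

# One 0/1 column per category; dtype=int keeps the output as integers
dummies = pd.get_dummies(credit, columns=["purpose"], dtype=int)

# Print the first row vertically, as on the slide
print(dummies.iloc[0])
```

Each row has exactly one dummy set to 1, so no category is treated as larger or smaller than another.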

How about capturing semantic similarity? Notice that similar categories share keywords: for example, all consumer loans feature the keyword "buy". You can count common keywords using CountVectorizer from the feature_extraction module.

First, replace underscores with spaces for easier tokenization.

Then, apply the encoder using its .fit_transform() method.

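Finally, convert the resulting sparse matrix to a DataFrame, naming the columns using the .get_feature_names_out() method of the CountVectorizer object (called .get_feature_names() in older scikit-learn releases, and removed in version 1.2).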
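The three steps above can be sketched as follows. The category strings are hypothetical, and the hasattr check is only there so the snippet runs on both older and newer scikit-learn releases.

```python
import pandas as pd
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical loan purposes, for illustration only
purpose = pd.Series(["buy_new_car", "buy_used_car", "education", "retraining"])

# Step 1: replace underscores with spaces so each keyword is its own token
text = purpose.str.replace("_", " ", regex=False)

# Step 2: fit the encoder and count keyword occurrences per row
vectorizer = CountVectorizer()
counts = vectorizer.fit_transform(text)

# Step 3: convert the sparse matrix to a DataFrame with keyword column names
if hasattr(vectorizer, "get_feature_names_out"):
    names = vectorizer.get_feature_names_out()
else:  # older scikit-learn releases
    names = vectorizer.get_feature_names()
keywords = pd.DataFrame(counts.toarray(), columns=names)
print(keywords)
```

In this toy example both car loans get a 1 in the "buy" and "car" columns, which is the kind of shared signal one-hot encoding alone cannot express.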

Note that as we improve our feature engineering pipeline, the dimension of our DataFrame increases! The question arises: how many features is too many?

Well, with more columns, the algorithm has more opportunity to mistake coincidental patterns for real signal. We can test this by adding columns to the data containing purely random numbers totally unrelated to the class. As we add more columns on the horizontal axis, overfitting kicks in! Accuracy improves in-sample but deteriorates out-of-sample.
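Here is a rough sketch of that experiment. It uses a synthetic dataset from make_classification and a logistic regression, both of which are assumptions for illustration rather than the data and classifier used in the lesson. The exact numbers will differ, but it lets you compare in-sample and out-of-sample accuracy as random columns are added.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

rng = np.random.RandomState(0)

# Synthetic stand-in for a real dataset
X, y = make_classification(n_samples=300, n_features=10,
                           n_informative=5, random_state=0)

results = {}
for n_fake in [0, 50, 200]:
    # Columns of pure noise, unrelated to the class label
    noise = rng.normal(size=(X.shape[0], n_fake))
    X_aug = np.hstack([X, noise])

    X_train, X_test, y_train, y_test = train_test_split(
        X_aug, y, test_size=0.5, random_state=0)

    clf = LogisticRegression(max_iter=5000).fit(X_train, y_train)
    results[n_fake] = (clf.score(X_train, y_train),
                       clf.score(X_test, y_test))

for n_fake, (acc_in, acc_out) in results.items():
    print(f"{n_fake:>3} fake columns: in-sample {acc_in:.2f}, "
          f"out-of-sample {acc_out:.2f}")
```

A widening gap between the two accuracies as n_fake grows is the overfitting signature described above.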

A popular solution is to add features freely, and then select the "best" ones using some feature selection technique.

Let's try the trick from the previous slide, and augment the credit scoring dataset with 100 fake variables.

Then, we use the SelectKBest algorithm from the feature_selection module to select the 20 highest-scoring columns. We use the chi2 scoring method, which requires non-negative feature values. The feature selector has a .fit() method to fit it to the data, and a .get_support() method that returns a boolean mask marking the selected columns (or their integer indices if called with indices=True).

Thankfully, only a handful of fake columns remain in the selected features.
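The selection step can be sketched like this. The data are entirely synthetic: ten hypothetical "real" count features whose mean depends on the class, plus 100 fake columns of random integers. This is an assumption standing in for the credit scoring dataset, chosen so that all values are non-negative as chi2 requires.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import SelectKBest, chi2

rng = np.random.RandomState(0)
n = 500
y = rng.randint(0, 2, size=n)

# Hypothetical informative features: Poisson counts with a class-dependent mean
real = pd.DataFrame(
    rng.poisson(lam=2 + 3 * y[:, None], size=(n, 10)),
    columns=[f"real_{i}" for i in range(10)],
)
# Fake features: random non-negative integers unrelated to the class
fake = pd.DataFrame(
    rng.randint(0, 10, size=(n, 100)),
    columns=[f"fake_{i}" for i in range(100)],
)
X = pd.concat([real, fake], axis=1)

# Keep the 20 columns with the highest chi2 score
selector = SelectKBest(chi2, k=20).fit(X, y)
mask = selector.get_support()        # boolean mask over the columns
selected = X.columns[mask]

n_fake_selected = sum(name.startswith("fake_") for name in selected)
print(list(selected))
print("fake columns kept:", n_fake_selected)
```

Because k is fixed at 20 and only ten columns carry signal here, some fake columns are kept by construction; choosing k is itself a decision that affects overfitting.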

So remember this: every decision you make in your pipeline might affect other aspects of it, and in particular the risk of overfitting. The following exercises confirm this insight.

#DataCamp #PythonTutorial #DesigningMachineLearningWorkflowsinPython
