LLM Evals - Part 1: Evaluating Performance

OTHER TRELIS LINKS:

TIMESTAMPS:

00:00 Introduction to LLM Evaluation

03:21 Understanding Evaluation Pipelines

09:56 Building a Demo Application

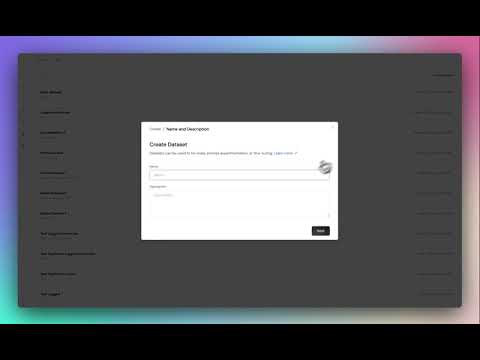

15:21 Creating Evaluation Datasets

23:52 Practical Evaluation Task / Question Development

27:40 Running and Analyzing Evaluations

30:24 Comparing LLM Model Performance using Evals

34:09 Conclusion and Next Steps

LLM Evals - Part 1: Evaluating Performance (0:34:23)

Why Evals Matter | LangSmith Evaluations - Part 1 (0:06:45)

LLM Evals and LLM as a Judge: Fundamentals (0:09:54)

LLM Eval Office Hours #1: Multi-Turn Chat Evals (0:20:33)

Evals Are Important #programming #coding #developerlife #llm #ai #evaluations (0:00:13)

How to run LLM evals with no code | PRACTICE (0:12:27)

Building LLM Evals From Scratch (0:28:37)

Part 1: Introduction and evaluations of LLMs for data extraction (0:20:16)

How to measure LLM writing quality when there is no right answer? (0:10:13)

What are Evals? (0:00:59)

Evaluating LLM-based Applications (0:33:50)

Welcome to the LLM evaluation course (0:01:32)

Create a dataset and run custom LLM evaluations in 1 minute (0:01:07)

LLM-Evals und LLM als Richter: Grundlagen (0:10:31)

The Mother of LLM Jailbreaks is Here! (0:00:55)

LLM Evaluation Basics: Datasets & Metrics (0:05:18)

LLM Evaluation Essentials: Statistical Analysis of Hallucination LLM Evaluations (0:50:54)

DONT DO LLM! RL AS LAST RESORT | Yann LeCun #fyp #chatgpt #llm #ai #deeplearning #machinelearning (0:00:20)

How to Construct Domain Specific LLM Evaluation Systems: Hamel Husain and Emil Sedgh (0:18:45)

A Gentle Introduction to LLM Evaluations - Elena Samuylova (1:01:19)

LLM System Design and AI Evals - Product Manager Mock Interview (0:22:01)

LLM evaluation benchmarks (0:03:07)

Deepchecks LLM Evaluation | Product Overview (0:01:30)

How to set up real-time LLM evaluations with LangWatch (0:03:21)