filmov

tv

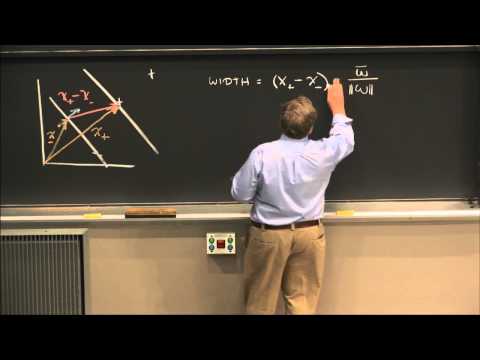

ML Teach by Doing Lecture 7: Linear Classifiers Part 2


Welcome to Lecture 7 of the Machine Learning: Teach by Doing project.

In this lecture, we run our first ML algorithm: the Random Linear Classifier. We learn about hyperparameters, cross-validation, and many more exciting concepts!

0:00 Introduction

5:20 Random Linear Classifier algorithm

11:40 Parameters vs Hyperparameters

14:47 Result validation

22:47 Cross validation

29:00 Loss function

31:45 6 ML steps recap

34:15 Conclusion
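
For readers who want to experiment before watching, here is a minimal sketch of the ideas named in the chapters above. It assumes the Random Linear Classifier is the usual "draw k random hyperplanes and keep the one with the lowest training error" procedure, with k as the hyperparameter; the lecture's exact formulation may differ. All function names, the uniform sampling range, and the toy data are hypothetical choices made for illustration.

```python
import random

def predict(theta, theta_0, x):
    """Linear classifier: +1 if theta . x + theta_0 > 0, else -1."""
    score = sum(t * xi for t, xi in zip(theta, x)) + theta_0
    return 1 if score > 0 else -1

def error_rate(theta, theta_0, X, y):
    """Fraction of points in (X, y) that the hypothesis misclassifies."""
    mistakes = sum(predict(theta, theta_0, x) != label for x, label in zip(X, y))
    return mistakes / len(X)

def random_linear_classifier(X, y, k, rng):
    """Draw k random hypotheses; return (theta, theta_0, training_error) of the best.

    theta and theta_0 are the parameters (chosen by the algorithm);
    k is the hyperparameter (chosen by us). The sampling range [-1, 1]
    is an assumption made for this sketch.
    """
    dim = len(X[0])
    best = None
    for _ in range(k):
        theta = [rng.uniform(-1, 1) for _ in range(dim)]
        theta_0 = rng.uniform(-1, 1)
        err = error_rate(theta, theta_0, X, y)
        if best is None or err < best[2]:
            best = (theta, theta_0, err)
    return best

def cross_validate(X, y, k, folds, rng):
    """Average held-out error when each of `folds` contiguous chunks is left out once."""
    n = len(X)
    errors = []
    for f in range(folds):
        lo, hi = f * n // folds, (f + 1) * n // folds
        X_train, y_train = X[:lo] + X[hi:], y[:lo] + y[hi:]
        theta, theta_0, _ = random_linear_classifier(X_train, y_train, k, rng)
        errors.append(error_rate(theta, theta_0, X[lo:hi], y[lo:hi]))
    return sum(errors) / folds

# Hypothetical toy data: 2-D points labelled by the sign of x1 + x2.
rng = random.Random(0)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(40)]
y = [1 if x[0] + x[1] > 0 else -1 for x in X]

theta, theta_0, train_err = random_linear_classifier(X, y, k=50, rng=rng)
cv_err = cross_validate(X, y, k=50, folds=4, rng=rng)
print(f"training error: {train_err:.2f}, cross-validation error: {cv_err:.2f}")
```

Trying a few values of k and comparing the cross-validation error is one simple way to see the difference between a parameter and a hyperparameter in practice.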

(a) Notes and PPT PDF shown in the video:

=================================================

Machine Learning: Teach by Doing is a project started by the co-founders of Vizuara: Dr. Raj Dandekar (IIT Madras BTech, MIT PhD), Dr. Rajat Dandekar (IIT Madras MTech, Purdue PhD) and Dr. Sreedath Panat (IIT Madras MTech, MIT PhD).

In 2018, Dr. Raj Dandekar attended his first ML lecture at MIT, and it transformed his life. Over the next four years, he mastered ML, published ML research, did ML internships and corporate jobs, and finally obtained his ML PhD from MIT.

Machine Learning: Teach by Doing is not a normal video course. In this project, we will begin learning ML from scratch, along with you. Every day, we will post what we learnt the previous day. We will make lecture notes and also share reference material.

As we learn the material again, we will share thoughts on what is actually useful in industry and what has become irrelevant. We will also share a lot of information on which subjects contain open areas of research. Interested students can start their research journey there.

If you are confused or stuck in your ML journey, perhaps courses and offline videos are not inspiring enough. What might inspire you is seeing someone else learn machine learning from scratch.

No cost. No hidden charges. Pure old-school teaching and learning.

=================================================

🌟 Meet Our Team: 🌟

🎓 Dr. Raj Dandekar (MIT PhD, IIT Madras department topper)

🎓 Dr. Rajat Dandekar (Purdue PhD, IIT Madras department gold medalist)

🎓 Dr. Sreedath Panat (MIT PhD, IIT Madras department gold medalist)
