K Nearest Neighbor | KNN | sklearn KNeighborsClassifier

In this presentation, we use KNN classification on the Iris dataset to predict the target class of a new instance.
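Below is a minimal scikit-learn sketch of the same idea. It is not the code from the video. The 80/20 split, `random_state=42`, `n_neighbors=5`, and the "new" flower measurements are illustrative assumptions.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Iris: 150 flowers, 4 numeric features, 3 species.
iris = load_iris()
X, y = iris.data, iris.target

# Hold out 20% of the data to check accuracy (assumed split, stratified by species).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# K = 5 is an assumed starting value, not a tuned one.
knn = KNeighborsClassifier(n_neighbors=5)
knn.fit(X_train, y_train)
accuracy = knn.score(X_test, y_test)

# Hypothetical new instance: sepal length, sepal width, petal length, petal width (cm).
new_instance = [[5.0, 3.4, 1.5, 0.2]]
predicted = knn.predict(new_instance)[0]

print(f"Test accuracy: {accuracy:.2f}")
print("Predicted species:", iris.target_names[predicted])
```

Short petals like these sit inside the setosa cluster. All five nearest neighbors therefore vote for that species.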

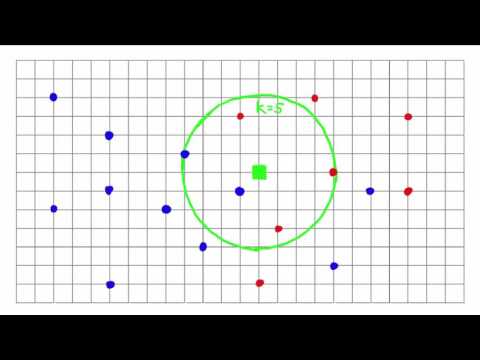

K-Nearest Neighbors (KNN) is a simple way to classify data. K is the number of nearest neighbors (individual data points) the algorithm consults. To find the category of a new instance, we measure its distance to the points already in the dataset and take the K closest ones. The instance is assigned to the category that most of those K neighbors belong to.
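The majority-vote rule described above can be written from scratch in a few lines of plain Python. This is a minimal sketch that assumes Euclidean distance and unweighted voting. The toy points and the labels `A`/`B` are hypothetical and do not come from the video.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's distance to the query with its label, nearest first.
    distances = sorted(
        (euclidean(x, query), label) for x, label in zip(train_X, train_y)
    )
    # Keep only the labels of the k closest points.
    k_labels = [label for _, label in distances[:k]]
    # Majority vote. Counter.most_common orders equal counts by first appearance,
    # so a tie goes to the label that occurs nearest to the query.
    return Counter(k_labels).most_common(1)[0][0]


# Hypothetical 2-D training data: two well-separated groups.
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
train_y = ["A", "A", "A", "B", "B", "B"]

print(knn_predict(train_X, train_y, (2.0, 2.0), k=3))  # → A
print(knn_predict(train_X, train_y, (6.0, 7.0), k=3))  # → B
```

An odd K avoids most ties in two-class problems. The tie rule in the comment only matters when the vote is split evenly.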

In this video we cover:

[00:00:00] - Intro

[00:02:28] - KNN Algorithm

[00:03:00] - scikit-learn KNeighborsClassifier

[00:03:36] - Implementation of KNN

[00:06:03] - Confusion Matrix

[00:07:04] - Classification Report

[00:08:01] - How to find the best value for K

[00:09:00] - K-Fold Cross-Validation Technique
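The evaluation steps listed above (confusion matrix, classification report, choosing K, K-fold cross-validation) can be combined in one short scikit-learn sketch. It does not reproduce the video's code. The candidate range K = 1..20, the 5 folds, and the random seeds are assumptions. K is chosen by cross-validation on the training split only, so the held-out test set stays untouched until the final report.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import KFold, cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
X, y = iris.data, iris.target
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# 5-fold cross-validation (assumed fold count) over candidate K values 1..20.
cv = KFold(n_splits=5, shuffle=True, random_state=42)
mean_scores = {}
for k in range(1, 21):
    scores = cross_val_score(
        KNeighborsClassifier(n_neighbors=k), X_train, y_train, cv=cv
    )
    mean_scores[k] = scores.mean()

# Highest mean CV accuracy; on ties, max() keeps the first (smallest) K.
best_k = max(mean_scores, key=mean_scores.get)

# Refit on the full training split with the chosen K, then evaluate once on the test set.
knn = KNeighborsClassifier(n_neighbors=best_k).fit(X_train, y_train)
y_pred = knn.predict(X_test)

cm = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted class
print(f"Best K by 5-fold CV: {best_k}")
print(cm)
print(classification_report(y_test, y_pred, target_names=iris.target_names))
```

`KFold` is used here to match the "K-Fold" step. For classifiers, passing an integer `cv=5` instead would make scikit-learn use stratified folds.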

#DataScience #MachineLearning #ComputerScience #AI

K Nearest Neighbors | Intuitive explained | Machine Learning Basics

What is the K-Nearest Neighbor (KNN) Algorithm?

StatQuest: K-nearest neighbors, Clearly Explained

Simple Explanation of the K-Nearest Neighbors (KNN) Algorithm

KNN Algorithm In Machine Learning | KNN Algorithm Using Python | K Nearest Neighbor | Simplilearn

Machine Learning | K-Nearest Neighbor (KNN)

How kNN algorithm works

Machine Learning Tutorial Python - 18: K nearest neighbors classification with python code

Simplifying K-Nearest Neighbors

#48 K- Nearest Neighbour Algorithm ( KNN ) - With Example |ML|

k nearest neighbor (kNN): how it works

KNN (K-Nearest Neighbors) - Machine Learning Algorithms

1. Solved Numerical Example of KNN Classifier to classify New Instance IRIS Example by Mahesh Huddar

K nearest neighbors (KNN) - explained | validation

Lec-7: kNN Classification with Real Life Example | Movie Imdb Example | Supervised Learning

7.5.1. K-Nearest Neighbors (KNN) - intuition

ML 21 : K-Nearest Neighbor (KNN) Algorithm Working with Solved Examples

KNN Algorithm in Machine Learning | K Nearest Neighbor | KNN Algorithm Example |Tutorialspoint

How to implement KNN from scratch with Python

K nearest neighbor

K-Nearest Neighbor

k-nearest-neighbour KNN

What Is The Difference Between KNN and K-means?

Solved Example K Nearest Neighbors Algorithm Weighted KNN to classify New Instance by Mahesh Huddar