filmov

tv

Apple M3, M3 Pro & M3 Max — Chip Analysis

Показать описание

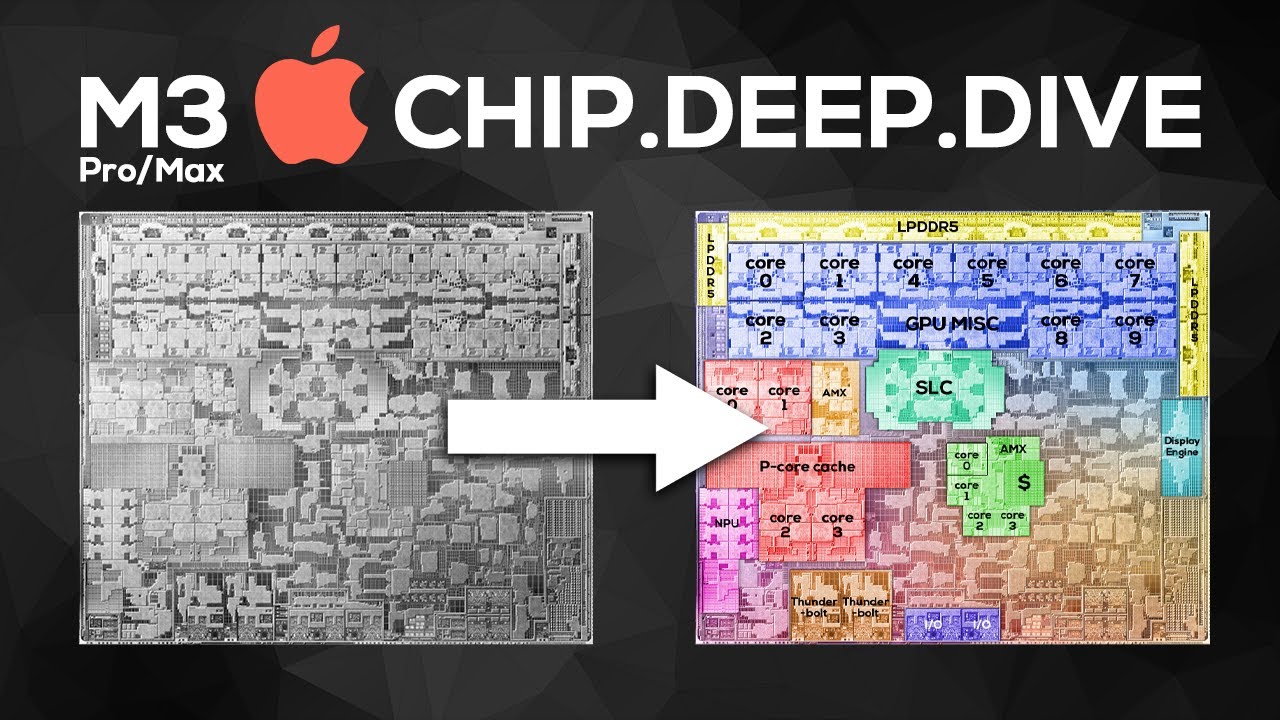

In-depth analysis of Apple's new 3nm chips: M3, M3 Pro and M3 Max. Silicon deep-dive, die-shot analysis and a closer look at CPU, GPU, NPU and the TSMC N3B process node.

0:00 Intro

0:47 M3 Silicon Analysis

4:17 M3 Pro Silicon Analysis

6:26 M3 Max Silicon Analysis

8:25 Why is the M3 Pro a downgrade?

10:47 NPU deep-dive

12:16 CPU deep-dive

13:17 GPU deep-dive

14:44 GPU architecture / Apple family 9 GPU

16:22 TSMC N3B Process Node

18:44 Wrap-up

0:00 Intro

0:47 M3 Silicon Analysis

4:17 M3 Pro Silicon Analysis

6:26 M3 Max Silicon Analysis

8:25 Why is the M3 Pro a downgrade?

10:47 NPU deep-dive

12:16 CPU deep-dive

13:17 GPU deep-dive

14:44 GPU architecture / Apple family 9 GPU

16:22 TSMC N3B Process Node

18:44 Wrap-up

M3 vs M3 Pro MacBook Pro after 1 Month - WERE WE WRONG?!

Space Black M3 Max MacBook Pro Review: We Can Game Now?!

M3 vs M3 Pro vs M3 Max - New MacBook Pro Buyer's Guide!

M3 Pro MacBook Pro after 3 Months - Why EVERYONE Was Wrong!

Apple M3 Deep Dive: The Details Most Skipped Over

MacBook Pro M3 (14 & 16): You're Being Misled

Apple M3, M3 Pro & M3 Max — Chip Analysis

M3 vs M3 Pro vs M3 Max - FINAL Performance Comparison!

Best MacBook for Programming: Programmer's Guide to Choosing the Perfect Mac

16” M3 MacBook Pro 6 MONTHS LATER - Real Day in the Life

Why the M3 MacBook Air is PERFECT!

🤯 SURPRISING M3 MacBook Coding Performance

M3 Pro vs M1 Pro MacBook Pro - Worth The Upgrade?

M3 VS M3 Pro 14' MacBook Pro (Late-2023)! What's Different & Which to Buy!?

M3 MacBook Pro Review - 1 Year Later!

Apple MacBook Pro M3 Pro Vs. M3 Max - FCPX

Macbook Pro (M3 Pro) - Perfection.

Why I LOVE the M3 Max MacBook Pro!

You SHOULD NOT Buy the M3 Mac for Music - Here’s why

M3 MacBook Pro VS M3 PRO!

The ONLY M3 MacBook Model You Should Buy!

MacBook Pro UNBOXING and setup *space black, m3 pro chip* 💻🖤

MacBook Pro 14' M3 Base Model - DON'T BE FOOLED!

M3 Max MacBook Pro 6 Months Later - Wow 👀

Комментарии

0:09:59

0:09:59

0:08:56

0:08:56

0:12:20

0:12:20

0:09:32

0:09:32

0:11:44

0:11:44

0:13:35

0:13:35

0:20:13

0:20:13

0:09:46

0:09:46

0:06:05

0:06:05

0:11:39

0:11:39

0:09:13

0:09:13

0:08:17

0:08:17

0:08:18

0:08:18

0:04:37

0:04:37

0:14:30

0:14:30

0:05:48

0:05:48

0:07:14

0:07:14

0:08:03

0:08:03

0:08:04

0:08:04

0:11:44

0:11:44

0:08:56

0:08:56

0:08:32

0:08:32

0:10:19

0:10:19

0:12:00

0:12:00