Understanding AI - Lesson 2 / 15: Hidden Layers

Dive deeper into the world of Neural Networks with Lesson 2 of the "Understanding AI" course! In this session, we explore how a simple genetic algorithm helps optimize network parameters. We'll also see the power of hidden layers and their role in shaping the behavior of neural networks. Join me as we move beyond single-input neurons and venture into the realm of multi-layer perceptrons!
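As a rough illustration of the genetic-algorithm idea mentioned above, here is a minimal Python sketch. It is an assumption-laden toy, not the code from the video: it uses mutation only (no crossover), a single step-activated neuron with two inputs, and the Boolean AND table as the target. The names `neuron`, `fitness`, `mutate`, and `evolve` are hypothetical.

```python
import random

def neuron(weights, bias, inputs):
    """Step-activated neuron: fires (1) when the weighted sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total + bias > 0 else 0

# Target behavior (assumed for this sketch): the Boolean AND truth table.
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def fitness(params):
    """Count how many rows of DATA the neuron gets right (0 to 4)."""
    *weights, bias = params
    return sum(neuron(weights, bias, x) == y for x, y in DATA)

def mutate(params, amount, rng):
    """Return a copy of params with each value nudged by up to +/- amount."""
    return [p + rng.uniform(-amount, amount) for p in params]

def evolve(start, generations=200, population=20, amount=0.5, seed=0):
    """Repeatedly mutate the best candidate and keep the fittest one.

    The current best is always among the candidates, so fitness never drops.
    Mutants are listed first, so a mutant wins ties and the search can drift
    across plateaus of equal fitness.
    """
    rng = random.Random(seed)
    best = list(start)
    for _ in range(generations):
        candidates = [mutate(best, amount, rng) for _ in range(population - 1)]
        candidates.append(best)
        best = max(candidates, key=fitness)
        if fitness(best) == len(DATA):
            break
    return best

if __name__ == "__main__":
    result = evolve([-1.0, -1.0, -1.0])
    print(result, fitness(result))
```

The search is random, so it is not guaranteed to reach a perfect score within the generation limit; the only property this sketch guarantees is that the returned parameters are at least as fit as the starting ones.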

Discover the significance of hidden neurons and nodes, and understand why they're termed "hidden". We'll clarify the terminology and debunk common misconceptions about activation functions.
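To make the role of a hidden layer concrete, here is a small Python sketch of XOR, a Boolean operation that a single step-activated neuron cannot compute but a network with one hidden layer can. The weights are hand-picked for illustration and are an assumption of this sketch, not values from the video; `step`, `layer`, and `xor` are hypothetical names.

```python
def step(x):
    """Step activation: 1 for positive input, otherwise 0."""
    return 1 if x > 0 else 0

def layer(inputs, weights, biases):
    """Compute one layer: each row of weights (plus its bias) is one neuron."""
    return [
        step(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hidden layer (hand-picked): first neuron acts as OR, second as AND.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]

# Output neuron: fires when OR is on and AND is off, which is XOR.
OUT_W = [[1, -1]]
OUT_B = [-0.5]

def xor(a, b):
    hidden = layer([a, b], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUT_W, OUT_B)[0]

if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor(a, b))
```

The two middle neurons are "hidden" in the sense that their outputs are neither inputs nor the final output of the network; only `xor` sees them.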

Join me on this learning journey! 🚀🧠

🚗THE PLAYGROUND🚗

💬DISCORD💬

⭐LINKS⭐

#HiddenLayers #NeuralNetworks #AIPlayground #MachineLearning #Perceptron #ActivationFunctions #ScikitLearn #AIProgression

⭐TIMESTAMPS⭐

00:00 Introduction

00:45 Genetic Algorithm

07:03 What the Network Really Learns

11:40 Two Inputs

31:08 Hidden Layers

38:37 Boolean Operations

41:22 Homework 3

41:32 Misconceptions

42:32 Homework 4

What Is AI? | Artificial Intelligence | What is Artificial Intelligence? | AI In 5 Mins |Simplilearn

Artificial intelligence explained in 2 minutes: What exactly is AI?

What is Artificial Intelligence? | ChatGPT | The Dr Binocs Show | Peekaboo Kidz

Lesson 2: Practical Deep Learning for Coders 2022

What is Artificial Intelligence? | Artificial Intelligence In 5 Minutes | AI Explained | Simplilearn

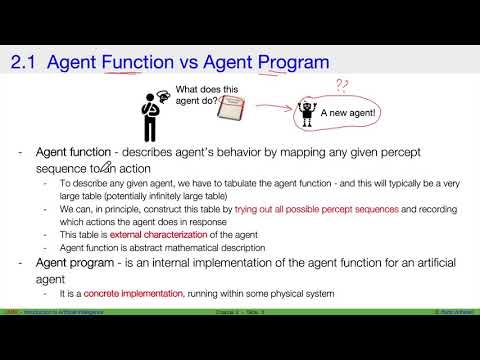

Lecture 2 | AI Free Basic Course

Lesson 2: Deep Learning 2018

How China Is Using Artificial Intelligence in Classrooms | WSJ

The Void - S01E02C02 - Who The Hell Is Buck Rogers - Chapter 2

AI - Ch02 - Intelligent Agents

AI vs Machine Learning

Best 12 AI Tools in 2023

Lesson 2 - Deep Learning for Coders (2020)

Artificial Intelligence Class 9 Unit 2 | AI Project Cycle - Overview

Introduction To Artificial Intelligence | What Is AI?| Artificial Intelligence Tutorial |Simplilearn

How is artificial intelligence (AI) used in education?

Class 9,10 Artificial Intelligence | Unit 2 | AI Project Cycle

Introduction to AI + Basics of AI Class 10 417 | AI Unit 1 & 2 🔥| CBSE 2023 | One Shot

What is AI? - Artificial Intelligence Facts for Kid

How much does an AI ENGINEER make?

A 2-Minute Intro to AI Make Documentation

How AI Could Save (Not Destroy) Education | Sal Khan | TED

Introduction to Generative AI

Artificial Intelligence Class 10 Unit 2 | AI Project Cycle - Overview 2022-23
