Deep Learning with Tensorflow - Quantization Aware Training

#tensorflow #machinelearning #deeplearning

Quantization-aware training (QAT) allows weights to be represented at reduced precision. In QAT, the model is quantized as part of the training process itself, as opposed to being quantized after training (post-training quantization).
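
To make the idea of quantizing during training more concrete, here is a minimal sketch of the "fake quantization" step that QAT schemes commonly apply in the forward pass: a value is snapped to a low-precision grid and immediately mapped back to float, so the rest of training sees the rounding error. The function name `fake_quantize`, the fixed range of -1.0 to 1.0, and the sample weights are illustrative assumptions, not taken from the video or from the TensorFlow API.

```python
def fake_quantize(x, x_min, x_max, num_bits=8):
    """Snap x to one of 2**num_bits evenly spaced levels in [x_min, x_max].

    Hypothetical helper for illustration only: it quantizes and then
    immediately dequantizes, so the result is still a float.
    """
    levels = 2 ** num_bits - 1               # number of steps between min and max
    scale = (x_max - x_min) / levels         # width of one quantization step
    clipped = min(max(x, x_min), x_max)      # values outside the range saturate
    q = round((clipped - x_min) / scale)     # integer level index, 0..levels
    return x_min + q * scale                 # back to float, now on the grid


# Assumed example weights and range.
weights = [-0.73, -0.12, 0.0, 0.41, 0.98]
simulated = [fake_quantize(w, -1.0, 1.0) for w in weights]

# For in-range values the rounding error is at most half a step.
max_error = max(abs(w - s) for w, s in zip(weights, simulated))
print(simulated, max_error)
```

Because the model trains against these rounded values, it can adapt its weights to the error that quantization will introduce at inference time.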

Quantization-aware training often yields better model accuracy than post-training quantization.

Quantization allows inference to be carried out using integer-only arithmetic, which can be implemented more efficiently than floating-point inference on commonly available integer-only hardware.
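
As a rough illustration of integer-only arithmetic, the sketch below quantizes a weight vector and an input vector to signed 8-bit integers using symmetric scaling, computes their dot product entirely in integers, and rescales the result once at the end. The helper names (`symmetric_scale`, `quantize`, `integer_dot`) and the sample values are assumptions made for this example. For simplicity the final rescale is done in floating point here, whereas real integer-only pipelines typically use a fixed-point multiplier for that step as well.

```python
def symmetric_scale(values, num_bits=8):
    """Pick a scale so the largest magnitude maps to the largest integer."""
    qmax = 2 ** (num_bits - 1) - 1           # 127 for 8 bits
    return max(abs(v) for v in values) / qmax


def quantize(values, scale, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax]."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def integer_dot(qa, qb):
    """Dot product using only integer multiplies and adds."""
    return sum(a * b for a, b in zip(qa, qb))


# Assumed example data.
weights = [0.5, -1.0, 0.25]
inputs = [2.0, 1.0, -4.0]

w_scale = symmetric_scale(weights)
x_scale = symmetric_scale(inputs)
q_weights = quantize(weights, w_scale)
q_inputs = quantize(inputs, x_scale)

acc = integer_dot(q_weights, q_inputs)       # integer accumulator
approx = acc * (w_scale * x_scale)           # single rescale back to real units
exact = sum(w * x for w, x in zip(weights, inputs))
print(acc, approx, exact)
```

The approximate result differs from the floating-point dot product only by the rounding error introduced when the values were quantized.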

TensorFlow in 100 Seconds

Keras with TensorFlow Course - Python Deep Learning and Neural Networks for Beginners Tutorial

Learn TensorFlow and Deep Learning fundamentals with Python (code-first introduction) Part 1/2

Tensorflow Tutorial for Python in 10 Minutes

TensorFlow 2.0 Complete Course - Python Neural Networks for Beginners Tutorial

What is TensorFlow | TensorFlow Explained in 3-Minutes | Introduction to TensorFlow | Intellipaat

PyTorch or Tensorflow? Which Should YOU Learn!

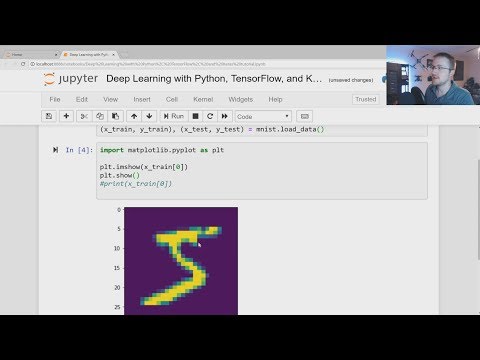

Deep Learning with Python, TensorFlow, and Keras tutorial

#DevCoach 161: Machine Learning in Google Cloud | Pengenalan TensorFlow dan Ekosistemnya

PyTorch vs TensorFlow | Ishan Misra and Lex Fridman

Deep Learning for Computer Vision with Python and TensorFlow – Complete Course

Python TensorFlow for Machine Learning – Neural Network Text Classification Tutorial

What is TensorFlow?

But what is a neural network? | Chapter 1, Deep learning

PyTorch in 100 Seconds

Pytorch vs TensorFlow vs Keras | Which is Better | Deep Learning Frameworks Comparison | Simplilearn

Pytorch vs Tensorflow vs Keras | Deep Learning Tutorial 6 (Tensorflow Tutorial, Keras & Python)

How to Build a Neural Network with TensorFlow and Keras in 10 Minutes

Intro to Machine Learning (ML Zero to Hero - Part 1)

Introduction | Deep Learning Tutorial 1 (Tensorflow Tutorial, Keras & Python)

TensorFlow 2.0 Tutorial For Beginners | TensorFlow Demo | Deep Learning & TensorFlow | Simplilea...

Deep Learning Tutorial | Deep Learning TensorFlow | Deep Learning With Neural Networks | Simplilearn

TensorFlow In 10 Minutes | TensorFlow Tutorial For Beginners | TensorFlow Explained | Simplilearn

Machine Learning on Arduino with TensorFlow Lite Micro
