filmov

tv

Neural networks [7.9] : Deep learning - DBN pre-training

Показать описание

But what is a neural network? | Chapter 1, Deep learning

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

Explained In A Minute: Neural Networks

Recurrent Neural Networks - Ep. 9 (Deep Learning SIMPLIFIED)

Deep Learning | What is Deep Learning? | Deep Learning Tutorial For Beginners | 2023 | Simplilearn

Why Do Tree Based-Models Outperform Neural Nets on Tabular Data?

Simple explanation of convolutional neural network | Deep Learning Tutorial 23 (Tensorflow & Pyt...

Neural Networks Pt. 2: Backpropagation Main Ideas

Deep Learning chapter 2 part 3: Training Deep Neural Networks | Pretraining, Momentum, Adagrad Etc

Recurrent Neural Network | Lecture 9 | Deep Learning

How Does a Neural Network Work in 60 seconds? The BRAIN of an AI

Tutorial 9- Drop Out Layers in Multi Neural Network

Deep Dream Video- Unconventional Neural Networks p.9

Introduction to Deep Learning (I2DL 2023) - 9. Convolutional Neural Networks

An Introduction to Machine Learning: Deep Learning for Sequential Inputs (7/9)

Artificial neural networks (ANN) - explained super simple

Lecture 7 | Training Neural Networks II

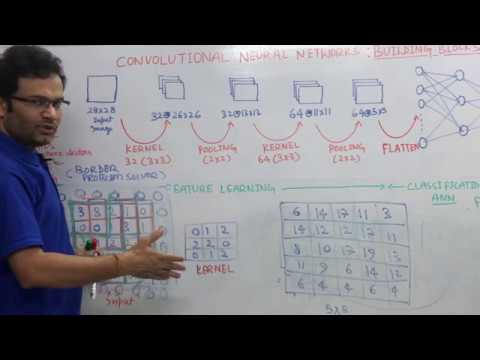

Convolutional Neural Networks | CNN | Kernel | Stride | Padding | Pooling | Flatten | Formula

What's a Neural Network?

Neural Network For Handwritten Digits Classification | Deep Learning Tutorial 7 (Tensorflow2.0)

Deep Dream - Unconventional Neural Networks p.7

[Neural Network 7] Backpropagation Demystified: A Step-by-Step Guide to the Heart of Neural Networks

What happens *inside* a neural network?

Forward Propagation and Backward Propagation | Neural Networks | How to train Neural Networks

Комментарии

0:18:40

0:18:40

0:05:45

0:05:45

0:01:04

0:01:04

0:05:21

0:05:21

0:05:52

0:05:52

0:00:58

0:00:58

0:23:54

0:23:54

0:17:34

0:17:34

0:44:34

0:44:34

1:11:04

1:11:04

0:01:00

0:01:00

0:11:31

0:11:31

0:08:57

0:08:57

1:07:56

1:07:56

0:17:05

0:17:05

0:26:14

0:26:14

1:15:30

1:15:30

0:21:32

0:21:32

0:00:44

0:00:44

0:36:39

0:36:39

0:12:48

0:12:48

![[Neural Network 7]](https://i.ytimg.com/vi/QflXxNfMCKo/hqdefault.jpg) 0:12:26

0:12:26

0:14:16

0:14:16

0:10:06

0:10:06