8-Step Turbo-Powered Flux Image Editing & Inpainting in ComfyUI!

Three new things this week: two newly released models from Alimama, plus some Rectified Flow Inversion (unsampling from RF Inversion)!

Still using more than 8 steps and waiting minutes for your results? Slap in the power of Turbo for extra-fast image generation with Flux, AND it works with RF inversion for amazing image edits! What are you waiting for? Not Turbo, that’s for sure 😉

Quickly and easily style any image. Turn anime images into realistic ones, or realistic images into cartoons… or 3D models, or wood, or… RF inversion is super versatile!

Nb. With recent ComfyUI updates, centre no longer centres and instead has become fit to view, but hey 🫤

Want to help support the channel?

== Beginners Guides! ==

== More Flux.1 ==

8-Step Turbo-Powered Flux Image Editing & Inpainting in ComfyUI!

FASTEST Way to Get FULL Quality in 8 Seconds - FLUX TURBO Lora!

How to Unlock Faster Image Generation with Hyper Flux Lora! Easy 8-Step Method Revealed!

Flux.1 ControlNet Union + Hyper-SD 8-Step Performance in ComfyUI!

My finger hurts so much, oh my god 😭 #youtubeshorts #makeup #sfx #sfx_makeup #foryou #art #shorts

💥The best Flux openpose workflow in ComfyUi - with main Flux.1 Dev 23G

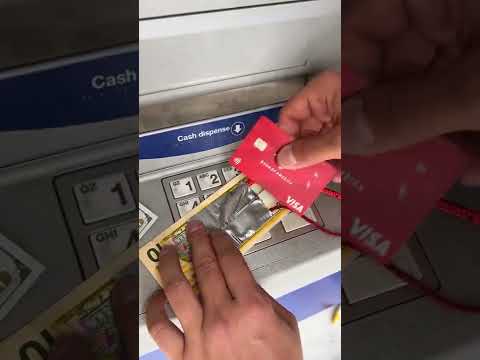

He made a trick in the atm #shorts

UPDATE: The POWER of Droplets and the Annoying SAVE #99

How To Use OpenArt To Generate And Edit STUNNING AI Images

Krea AI Tutorial - 2025 | New Updates | How to Use Krea AI - For Beginners

💥 FLUX 1.1 pro : The Best Way To Use It Free !

Naruto saying Sasuke in different languages🥶 #naruto #sasuke #anime #ytshorts #shorts

Deep dive into the Flux

AI-Based Batch Portrait Workflow

Cybertruck that transforms?! #shorts #tesla #cybertruck

ComfyUI Tutorial Series Ep17 Flux LoRA Explained! Best Settings & New UI

Better Flux Inpainting with Area Cropping (Workflow Included)

Elle Était Coincée Donc Ce Policier L'aide

Creating mystical landscapes with Luminar AI

Ptdr Ad Laurent c’est une dinguerie 🤣🤣 #poupettemytho #Allan #adlaurent #mym #onlyfan #coco

Straight up the Chute! #SandHollow #Jeep #Offroad #Shorts

How to override easily in 10 sec speed limit of electric bike pedal assist(cube with bosh engine)

final year diploma engineering project #viral #mechanical

Luminar Coffee Break: Using AI to Create multiple versions of an image
